Big business

MaxMine captures, processes, and analyzes data from mining equipment at open-pit mine sites in Australia and Africa.

These are enormous excavation projects that involve moving thousands, if not millions, of tons of rock and ore using some of the largest and most powerful machines on the planet. MaxMine captures the operational data generated by these multi-story mining trucks—such as the time it takes for them to travel across the site, and whether they exceeded site speed limits—to help guide operators toward improved efficiency and performance.

Theo Visan, Senior Analytics Engineer at MaxMine, explained: “Every site, no matter what they're mining, has to move payload. And they are going to want to see data showing how long it takes for a truck to be loaded in the pit, how quickly that material is transported out, how fast operators are completing a circuit, how safely drivers are taking their corners, and so on.”

“The sheer scale of these projects means that any given analysis might be a couple of million to tens of millions of dollars of value for our clients,” added Richard Jones, MaxMine’s Head of Analytics and Support. “That’s just the industry that we're in.”

Embedding data in the mining sector

MaxMine’s business operates with “industrial-scale internet of things (IoT)” equipment, which presents its own set of challenges.

“We're dealing with mine sites all over the world, some of which are in very remote areas—the middle of the desert in remote Australia or across Africa,” said Theo. “So getting data from there up to the cloud is our first challenge.”

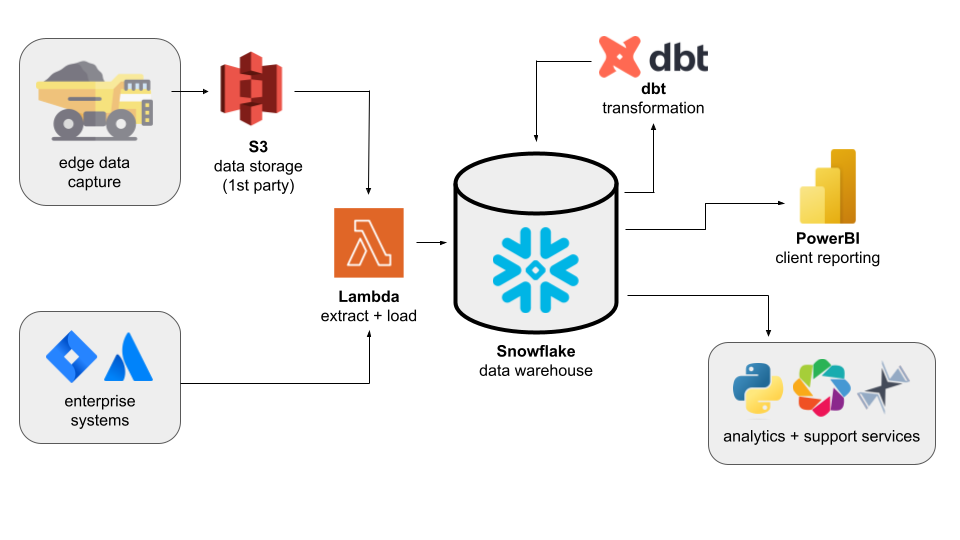

MaxMine uses AWS as the basis for its operations, with proprietary data compression methods and an extensive data processing pipeline running in the cloud. Algorithms automatically classify activities so that analysts can see what the equipment is doing without the need for operators to press buttons or manually log anything.

“The automation is critical,” explained Richard. “Nobody can game the system and it yields high-quality data.”

From there, the MaxMine team feeds the collected information back to the mine site in two ways. First, they provide Power BI reports, which run through Snowflake and dbt, on key metrics such as useful activity vs. idle time, operator scoring, and asset health monitoring. Second, they publish a near-live map on their website that allows superintendents to track operations, view useful properties (vehicle speeds, engine load, payload carried, etc.), and review incidents.

A very manual data workflow

MaxMine’s current data workflow and stack contrast with its previous data pipelines, which involved little in the way of automated processing. The team used to struggle to aggregate and collectively analyze data from such a large number of different machines.

They used a batch-based process that produced many files stored in many places, depending on which system created them and which equipment the data came from. As a result, the files ended up in many different formats, time zones, and locations.

“As an analyst, you would have to wrangle all of those files together by manually downloading them straight out of S3 buckets. Then, you would have to unpack and transform all of your different files to make sure that they were in the same format, time zone, and all that sort of nonsense.

“Before dbt we did a lot of manual data manipulation,” said Theo.

On top of messy formatting and documentation, the team’s ability to easily analyze their data was further complicated by a lack of historical data storage capabilities. This made performing even relatively simple queries—such as how much total tonnage a client had produced over the past 12 months—time-consuming affairs.

“You could do it, but it required manually downloading hundreds or even thousands of different files,” winced Theo. “We had a whole bunch of loose Python scripts that were just talking to S3 buckets, grabbing random files, and storing them on your local system. Maintaining them was a nightmare.”
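To make the format and time-zone problem concrete, here is a minimal Python sketch of the kind of standardization step described above. It is not MaxMine’s code: the two source layouts (`from_site_a`, `from_site_b`), their field names and units, and the fixed UTC offset are all hypothetical. Each export is mapped into one schema with UTC timestamps, after which a tonnage question over a time window becomes a single filter-and-sum rather than a hunt through many downloaded files.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical fixed UTC offset for an example site (illustrative only;
# real sites may observe daylight saving and would need a proper tz database).
SITE_A_TZ = timezone(timedelta(hours=8))


def from_site_a(row):
    """Hypothetical export: day-first local time string, payload in tonnes."""
    local = datetime.strptime(row["time"], "%d/%m/%Y %H:%M:%S")
    ts = local.replace(tzinfo=SITE_A_TZ).astimezone(timezone.utc)
    return {"truck_id": row["truck"], "ts_utc": ts, "payload_t": float(row["tonnes"])}


def from_site_b(row):
    """Hypothetical export: ISO 8601 timestamp with offset, payload in kilograms."""
    ts = datetime.fromisoformat(row["timestamp"]).astimezone(timezone.utc)
    return {"truck_id": row["vehicle"], "ts_utc": ts, "payload_t": row["payload_kg"] / 1000}


def total_tonnage(records, start, end):
    """Sum payload (tonnes) for records with start <= ts_utc < end (UTC-aware bounds)."""
    return sum(r["payload_t"] for r in records if start <= r["ts_utc"] < end)


# Sample rows in each hypothetical layout.
site_a_rows = [{"truck": "T1", "time": "01/03/2024 06:30:00", "tonnes": "220.5"}]
site_b_rows = [{"vehicle": "H7", "timestamp": "2024-03-01T01:15:00+02:00", "payload_kg": 180000}]

# Standardize both sources into one list of records with a shared schema.
records = [from_site_a(r) for r in site_a_rows] + [from_site_b(r) for r in site_b_rows]

window_start = datetime(2024, 2, 29, tzinfo=timezone.utc)
window_end = datetime(2024, 3, 1, tzinfo=timezone.utc)
print(total_tonnage(records, window_start, window_end))  # 400.5
```

Both sample loads are logged on 1 March in local time but fall on 29 February in UTC, which is exactly the kind of discrepancy that mixing unconverted files can hide. In a warehouse-based setup like the one described later, this logic would typically live in SQL transformation models (for example, dbt models) rather than in local Python scripts.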

Encountering dbt

With MaxMine’s client list and global presence growing, the analytics team needed a solution that would reduce the complexity and manual work in their data pipeline. The answer came in the form of dbt, which Richard first discovered through the Data Engineering Podcast.

“There was an episode on dbt,” he said. “It was so impressive that I lobbied our executive team to get dbt Cloud and Snowflake.”

“Initially it was just a trial to see how effective the technology was,” added Theo. “But as soon as they had it up and running, the team all said ‘this is it!’ They loved it from day one.”

MaxMine’s initial use case for its analytics data store (its Snowflake and dbt setup) was to combine, transform, and standardize incoming data streams. With the data consolidated in a single location, the analytics team could work with it in an easily digestible format.

“We’re now able to do analysis that used to take weeks in days,” said Theo. “It's a very win-win set-up. If we're able to perform analysis more quickly, it's more efficient for us in terms of the people we need and it's better for our clients because they get actionable insights, faster.”

Lowering the skill floor

Introducing dbt Cloud into the team’s data stack had benefits that extended beyond increased speed and efficiency. The new system also lowered the skill floor and requirements for MaxMine’s growing team of analysts to be productive.

“Previously we required someone with SQL skills, Python skills, and a suite of other coding experience to be able to handle even the simpler type of queries that we might get,” noted Theo. “Now, so long as someone understands the fundamentals, they can very easily hop in and contribute. We're able to get our support agents more easily involved in the actual analysis process, too.”

According to Richard, this has helped bring about “a paradigm change” for the team.

“Up until we had this set of tools, our analysts and support staff had PhDs in physics and engineering,” he said. “Now we can hire graduates.

“Before, we had to have conversations about how to use Python in creative ways to query our data lake. Now we don't even talk about how to query the data—or we very rarely need to. It’s a change in kind rather than degree.”

Saving clients money with improved reporting

Another major advantage of MaxMine’s new stack is that the team could move to more modern analytics and reporting tools.

“We didn't have the capability of using actual BI before,” said Theo. “Now, we have a set of standard BI reports as well as custom BI reports that we create on a per-client basis.”

The ability to provide standard and custom reports can have a real impact on clients’ operations.

“Some mine sites get paid on how many tons they move per hour. And ‘per hour’ can mean the number of hours that the equipment is running. However, if you're in the 45°C (113°F) desert, you prefer to stay in the cab and keep the air conditioning running rather than walking through the heat to a crib hut.”

Before MaxMine’s analysis, it was common for drivers to keep their trucks’ engines running, even when they were waiting for as long as two hours. Essentially, the vehicles were being used as huge air conditioners.

“Considering that the engines for these things are 16 cylinders, 2000 kilowatts, and four turbos—the kind of thing you use as backup generators for a small city—using them as air conditioners for one person is not very efficient.”

On top of being wasteful, keeping the engines idling also increased the number of hours registered, which lowered the mine’s tons-per-hour rate and, with it, the revenue tied to that rate.

By monitoring idle time at locations where operators have access to a temperature-regulated building, MaxMine was able to identify excessive use of equipment for air conditioning and reduce total equipment hours by five percent, a 12.5-hour reduction in idle time per day. In the first six months after MaxMine’s recommendations were implemented, the client saved more than 60,000 liters of fuel.
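The figures above can be sanity-checked with simple arithmetic. Assuming the five percent is measured against total daily equipment hours for the monitored fleet (the text does not say so explicitly), a 12.5-hour saving implies a baseline of about 250 equipment hours per day. The sketch also shows why idling hurt the tons-per-hour metric: with tonnage held constant, removing registered hours raises the rate. The daily tonnage figure is hypothetical and only illustrates the direction and size of the effect.

```python
# Figures stated in the text.
reduction_pct = 5           # percent of total equipment hours removed
hours_saved_per_day = 12.5  # idle hours removed per day

# Implied baseline (derived; assumes the 5% is of total daily equipment hours).
baseline_hours = hours_saved_per_day * 100 / reduction_pct

# Hypothetical daily tonnage, held constant before and after the change.
TONNES_PER_DAY = 10_000


def tons_per_hour(tonnes, hours):
    """Tonnes moved per registered equipment hour."""
    return tonnes / hours


before = tons_per_hour(TONNES_PER_DAY, baseline_hours)
after = tons_per_hour(TONNES_PER_DAY, baseline_hours - hours_saved_per_day)
uplift_pct = (after / before - 1) * 100

print(baseline_hours)        # 250.0
print(before)                # 40.0
print(round(after, 2))       # 42.11
print(round(uplift_pct, 2))  # 5.26
```

More generally, cutting a fraction p of registered hours while tonnage stays the same multiplies tons per hour by 1/(1 - p), so a 5% cut yields roughly a 5.3% uplift regardless of the tonnage assumed.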

Looking ahead: data as the center of operations

The MaxMine team’s future plans are, in a few words, “bigger and better,” laughed Theo.

“There are two focus areas,” he continued. “First, the wider business is starting to see more value in the analytics data store, and second, it's becoming more central to how we operate.”

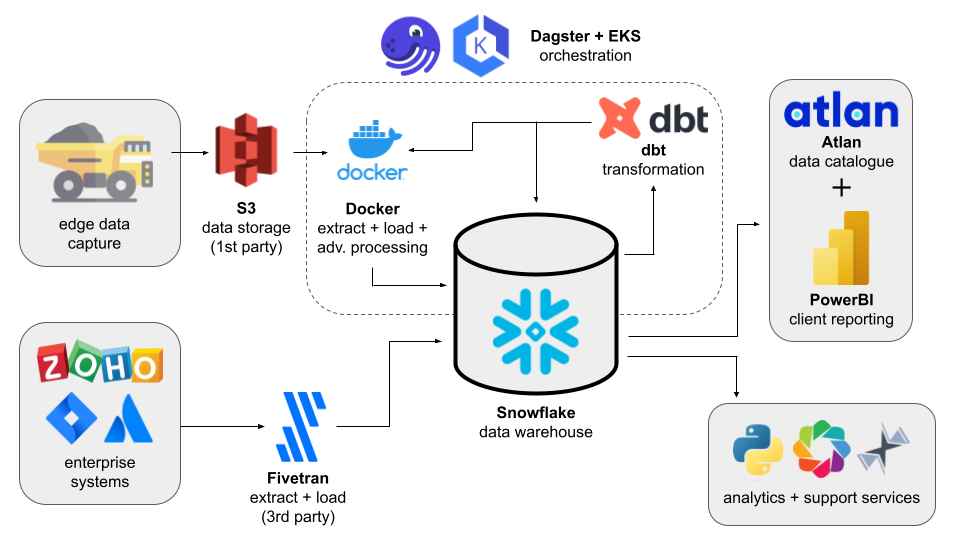

As the team continues to mature its stack and add tools, it's looking to move MaxMine’s other bespoke applications into Snowflake and to integrate further with third-party systems such as Jira and Zoho Desk.

“dbt Cloud will become the key technology in stitching everything together so that our backend infrastructure is in one place and contributes to one central source of truth,” Theo explained. The output can then be fed back into analytics work for a more complete picture of each mine site’s operations—while simultaneously reducing the infrastructure costs of duplicating and maintaining data pipelines across systems.

“As we onboard more clients, the importance of our analytics data store keeps growing. I don’t know what new use cases we’ll see, but I do know that I’m 100% confident my team has the data workflow they need to uncover impactful, cost-saving insights for every client,” said Theo.