dbt Launch Showcase 2025 recap

Today, at the annual dbt Launch Showcase, we released a ton of exciting new features for dbt, all purpose-built to help our customers and users tackle the next wave of analytics and AI. It was, without a doubt, the biggest launch event in our company’s history. In a jam-packed 90 minutes, we had an executive keynote, new product announcements, and in-depth demos, all centered on how dbt is empowering enterprises for the next era of analytics. Let’s break that down 👇

Empowering the enterprise for the next era of analytics

The next era is undeniably driven by, and in service of, AI. We showcased the dbt MCP server and other dbt Copilot-enhanced features that help our users connect their AI systems to governed, trusted data.

And in this new AI era, data has to be a team sport. That means data tooling needs to extend beyond data developers to include downstream analysts, who need governed ways to participate in the data workflow, as well as organizational stakeholders, who need to keep data initiatives within budget and future-proof their investments.

The innovations we shared are designed to empower our users—whether data developers, data analysts, or their organizational leaders—to standardize on dbt to help their organizations win with data in this new era.

- For developers: The new dbt Fusion engine and VS Code extension (both in public beta) deliver productivity and developer experience improvements that make it turnkey (and delightful!) to ship high-quality data at speed and scale

- For analysts: A new suite of platform features designed specifically for analysts—dbt Canvas (GA), dbt Insights (Preview), an expanded dbt Catalog (Preview for Snowflake assets), and a new flexible seat type—make it easy for data analysts to bring their business knowledge to bear and participate in governed, self-service data development in a dbt-tonic way

- For organizations: New cost optimization and platform flexibility features help budget owners and organizational leaders optimize every dollar spent on data and standardize on a platform that supports flexible navigation of cross-platform architectures

A new, simplified naming philosophy

This is such a massive launch that not only did we have to start from scratch on the technology behind dbt, we had to re-think the way we talk about it. In the past, we primarily built two separate but interlocking products: dbt Core and dbt Cloud. But with the launch of the dbt Fusion engine, we’ve rearchitected significant parts of the technology to work more closely together. As a result, we don’t really have two products anymore…we just have one. And it’s called dbt. You can read a lot more about our new naming and the ethos behind it here.

The dbt Fusion engine is the future of dbt and is available to you whether you’re a managed dbt customer or not: you can adopt Fusion via the freely available and permissively licensed source code and binary, or via the dbt platform. See the full post here on how we've licensed Fusion.

So, back to the naming: you can choose to just use the open source / source available components (the engines), or you can use paid features on top. But either way, the experience is tightly integrated. It’s all just dbt.

Let’s dive into the specifics of our announcements.

AI needs a strong data foundation

dbt MCP Server

As your AI stack evolves, your AI systems need structured context that stays consistent. And that context needs to be centralized, governed, and available across every agent, tool, and workflow. It can’t live in fragments or tribal knowledge; it has to be defined in code.

MCP is quickly emerging as the standard for providing context to LLMs and AI agents, allowing them to function at a high level in real-world, operational scenarios. With the dbt MCP Server (in beta and publicly available in an open source repo), you can expose your dbt project’s trusted models, metrics, tests, and lineage to AI systems, giving agents and LLMs a structured foundation of governed context so you can trust the queries, reasoning, and actions they inform.

By integrating with the dbt MCP server, AI agents and LLMs can now discover trusted data models and metrics, query the semantic layer using generated SQL, and safely execute dbt projects, enabling structured, governed AI workflows.
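To make "integrating with the MCP server" a little more concrete: MCP clients talk to servers using JSON-RPC 2.0 messages, first asking which tools exist (`tools/list`) and then invoking one (`tools/call`). The sketch below only builds those two request messages; it omits the initialization handshake and the transport. The tool name `list_metrics` and its empty arguments are assumptions for illustration, so check the names the dbt MCP server actually returns from `tools/list`.

```python
import json
from typing import Any, Optional


def jsonrpc_request(request_id: int, method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the envelope MCP uses for client-to-server calls."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Step 1: ask the server which tools it exposes.
list_request = jsonrpc_request(1, "tools/list")

# Step 2: call one of them. The tool name and arguments are hypothetical;
# use whatever the server actually advertises in its tools/list response.
call_request = jsonrpc_request(2, "tools/call", {"name": "list_metrics", "arguments": {}})

print(list_request)
print(call_request)
```

In practice an agent framework or MCP client library sends these messages for you; the point is that every tool an agent uses goes through a named, inspectable call rather than free-form access to the warehouse.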

Together, these dbt MCP server tools make dbt not just the structured context layer, but also:

- The governed control layer between your data workflows and your AI.

- The bridge between the governed warehouse and your LLMs, agents, and AI-powered tools.

- The standard for creating governed, trustworthy datasets so structure is defined once and reused across every AI workflow.

With more tools on the way, dbt is becoming the connective layer powering enterprise AI systems with confidence. Download the repo here, and learn more about how dbt can help with your AI initiatives here.

Empowering developers with the new Fusion engine and VS Code Extension

New era, new engine

We announced that the new dbt Fusion engine is in public beta for eligible Snowflake projects. We also released the official dbt VS Code Extension in public beta.

Fusion represents a huge leap forward for teams building with dbt. Fusion isn’t just another feature. It’s the foundation for a new era of analytics engineering, ushering in a whole new dbt experience that is faster, more intelligent, and more cost-efficient than ever before.

By standardizing on Fusion, teams can unlock:

- Lightning-fast performance, with parse times up to 30x faster than dbt Core.

- Native SQL comprehension, unlocking capabilities like real-time validation of your code—without the need to query your warehouse.

- State-awareness, giving dbt a rich understanding of the state of your dbt project and what’s been materialized in your warehouse. This allows dbt to intelligently avoid unnecessary builds, resulting in higher velocity pipelines and substantial cost savings. Early customers are already seeing ~10% reductions in warehouse spend.
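The core idea behind avoiding unnecessary builds can be sketched in a few lines. This is a simplified illustration, not Fusion’s actual algorithm: it assumes each model’s SQL is fingerprinted at its last successful build, and that a model needs rebuilding only if its own SQL changed or an upstream model is being rebuilt. A real state-aware engine also accounts for things like source freshness and configuration changes, which are ignored here. The `Model` class and `plan_builds` function are hypothetical names.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Model:
    """A hypothetical, minimal stand-in for a dbt model node."""
    name: str
    sql: str
    parents: list[str] = field(default_factory=list)


def fingerprint(sql: str) -> str:
    """Hash the model's SQL so edits can be detected without querying the warehouse."""
    return hashlib.sha256(sql.encode("utf-8")).hexdigest()


def plan_builds(models: list[Model], last_built: dict[str, str]) -> list[str]:
    """Return the models to rebuild, assuming `models` is already in DAG (topological) order.

    `last_built` maps model name -> fingerprint recorded at its last successful build.
    """
    rebuild: set[str] = set()
    plan: list[str] = []
    for model in models:
        sql_changed = last_built.get(model.name) != fingerprint(model.sql)
        parent_rebuilt = any(parent in rebuild for parent in model.parents)
        if sql_changed or parent_rebuilt:
            rebuild.add(model.name)
            plan.append(model.name)
    return plan


models = [
    Model("stg_orders", "select * from raw.orders"),
    Model("stg_customers", "select * from raw.customers"),
    Model(
        "fct_orders",
        "select o.*, c.region from stg_orders o join stg_customers c using (customer_id)",
        parents=["stg_orders", "stg_customers"],
    ),
]
last_built = {m.name: fingerprint(m.sql) for m in models}

# Edit one staging model; only it and its downstream model need rebuilding.
models[1].sql = "select id as customer_id, region from raw.customers"
print(plan_builds(models, last_built))  # ['stg_customers', 'fct_orders']
```

Here `stg_orders` is skipped entirely, which is where the warehouse savings come from: unchanged work is never sent to the warehouse.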

“We anticipate the dbt Fusion engine will mark a new chapter for our data team — one where speed and efficiency are baked into every part of the analytics lifecycle.”

Matt Karan, Senior Data Engineer @Obie Insurance

A purpose-built VS Code extension, powered by the Fusion engine

The official dbt VS Code Extension is the only way to tap into the full power of the Fusion engine when developing locally. With it, users get a hyper-responsive development experience bolstered by capabilities like:

- IntelliSense for smart autocompletion for models, columns, macros, and functions.

- Automatic refactoring to update references across your entire project instantly when a model or column is renamed.

- Go-to-definition and inline CTE previews to aid in navigating large projects and rapid debugging.
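For a sense of what "update references across your entire project" involves, here is a deliberately naive sketch of renaming a model: it rewrites single-argument `ref('old')` and `ref("old")` calls in a dictionary of SQL files. This is an assumption-laden toy, not how the extension works. A regex cannot understand SQL, so it ignores two-argument refs, versioned refs, and column renames, which is exactly why the extension relies on Fusion’s SQL comprehension instead. The `rename_ref` function is hypothetical.

```python
import re


def rename_ref(files: dict[str, str], old: str, new: str) -> dict[str, str]:
    """Rewrite single-argument ref('old') / ref("old") calls so they point at `new`.

    Toy approximation only: two-argument refs, versioned refs, and column
    renames are not handled.
    """
    # Capture the opening quote so the closing quote must match it (\1).
    pattern = re.compile(r"""ref\(\s*(['"])""" + re.escape(old) + r"""\1\s*\)""")

    def replace(match: "re.Match[str]") -> str:
        quote = match.group(1)
        return f"ref({quote}{new}{quote})"

    return {path: pattern.sub(replace, sql) for path, sql in files.items()}


project = {
    "models/fct_orders.sql": "select * from {{ ref('stg_orders') }}",
    "models/order_kpis.sql": 'select * from {{ ref("stg_orders") }} join {{ ref("stg_orders_v2") }} using (id)',
}
renamed = rename_ref(project, "stg_orders", "stg_order_lines")
for path, sql in renamed.items():
    print(path, "->", sql)
```

Note that `stg_orders_v2` is left alone because the pattern requires the closing quote immediately after the old name.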

Note that to use the VS Code Extension, your project must be running on the Fusion engine. The extension does not support dbt Core, as the key developer experience enhancements it’s designed to enable rely on the technological foundations of the new engine. You can learn more here.

Empowering analysts with dbt Canvas, dbt Insights, and an expanded dbt Catalog

We’re launching a new suite of capabilities to make it easier for analysts to contribute directly to transformation workflows in dbt without sacrificing the governance and quality data teams require: dbt Canvas, dbt Insights, and an expanded dbt Catalog.

Build and edit models in a visual development environment with dbt Canvas

dbt Canvas is an AI-powered visual editing experience that makes it easy for analysts to build and edit dbt models. With Canvas, analysts can work in a drag-and-drop interface to discover trusted sources and models, apply transformations like joins, filters, and aggregations, and preview outputs step-by-step as they go. Every transformation is automatically compiled into SQL that fits seamlessly into your existing dbt project.
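To illustrate what "compiled into SQL" can mean, the sketch below turns an ordered list of visual steps (filter, join, aggregate) into a single dbt model, with one CTE per step and `{{ ref() }}` for upstream models. The step dictionary format, the `compile_steps` function, and the generated SQL shape are all hypothetical; Canvas’s real compiler and output are not described here.

```python
def compile_steps(source_model: str, steps: list[dict]) -> str:
    """Compile an ordered list of visual steps into one dbt model's SQL, one CTE per step."""
    # '{{{{' in an f-string renders as '{{', producing Jinja like {{ ref('stg_orders') }}.
    ctes = [f"source as (select * from {{{{ ref('{source_model}') }}}})"]
    previous = "source"
    for i, step in enumerate(steps, start=1):
        kind = step["kind"]
        if kind == "filter":
            body = f"select * from {previous} where {step['condition']}"
        elif kind == "join":
            body = (
                f"select {previous}.*, {step['select']} from {previous} "
                f"join {{{{ ref('{step['model']}') }}}} as {step['alias']} on {step['on']}"
            )
        elif kind == "aggregate":
            keys = ", ".join(step["group_by"])
            body = f"select {keys}, {step['measure']} from {previous} group by {keys}"
        else:
            raise ValueError(f"unsupported step kind: {kind!r}")
        name = f"step_{i}"
        ctes.append(f"{name} as ({body})")
        previous = name
    return "with " + ",\n".join(ctes) + f"\nselect * from {previous}"


steps = [
    {"kind": "filter", "condition": "status = 'completed'"},
    {"kind": "join", "model": "stg_customers", "alias": "c",
     "on": "step_1.customer_id = c.customer_id", "select": "c.region"},
    {"kind": "aggregate", "group_by": ["region"], "measure": "sum(amount) as revenue"},
]
print(compile_steps("stg_orders", steps))
```

Because the output is ordinary dbt SQL with `ref()` calls, it participates in lineage, testing, and code review just like a hand-written model.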

Canvas includes always-on data profiling, context-aware AI assistance with dbt Copilot, and intuitive Git-based version control. Analysts can commit their work and open PRs without leaving the Canvas interface, making it simple to contribute while maintaining quality and oversight. Whether defining a new KPI or iterating on an existing model, analysts can work with confidence and speed inside a fully governed workflow. dbt Canvas is now GA for Enterprise customers. For more details, check out the dedicated Canvas launch blog post.

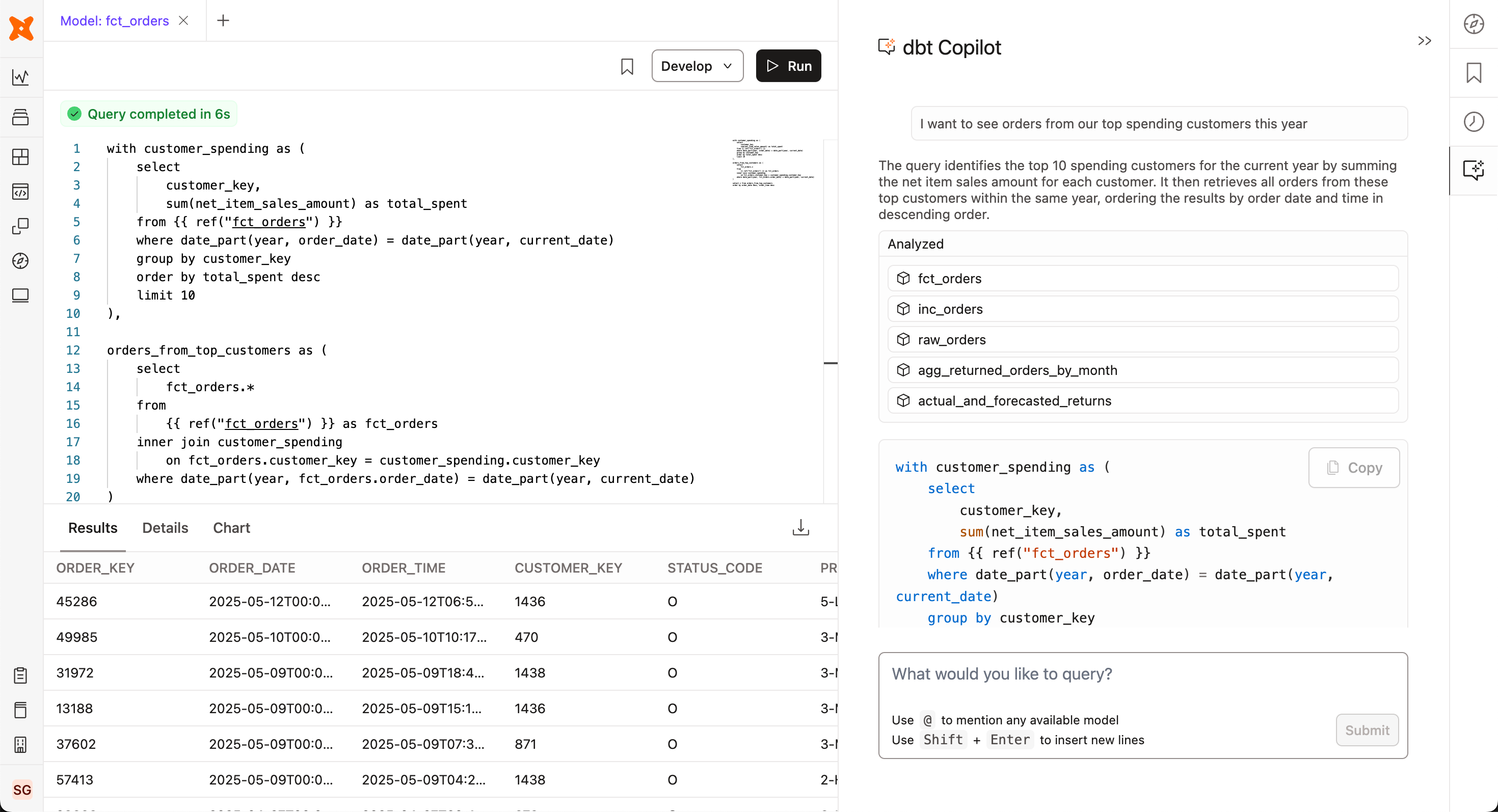

Get ad hoc insights fast with dbt Insights

dbt Insights is a new interface for fast, governed data exploration that combines metadata, documentation, AI-assistance, and powerful querying capabilities into one unified experience. Insights supports both SQL and natural language queries, with built-in AI assistance from dbt Copilot, query history, and instant visualizations.

Users can quickly validate ideas, explore trends, and iterate on queries in a context-rich environment that surfaces metadata, lineage, and trust signals from across the dbt platform. When ready, analysts can turn their exploratory work into models in dbt Canvas or Studio, all within the same workflow.

With tight integration across dbt, Insights helps teams collaborate, explore, and build with confidence. dbt Insights is in Preview for Enterprise customers.

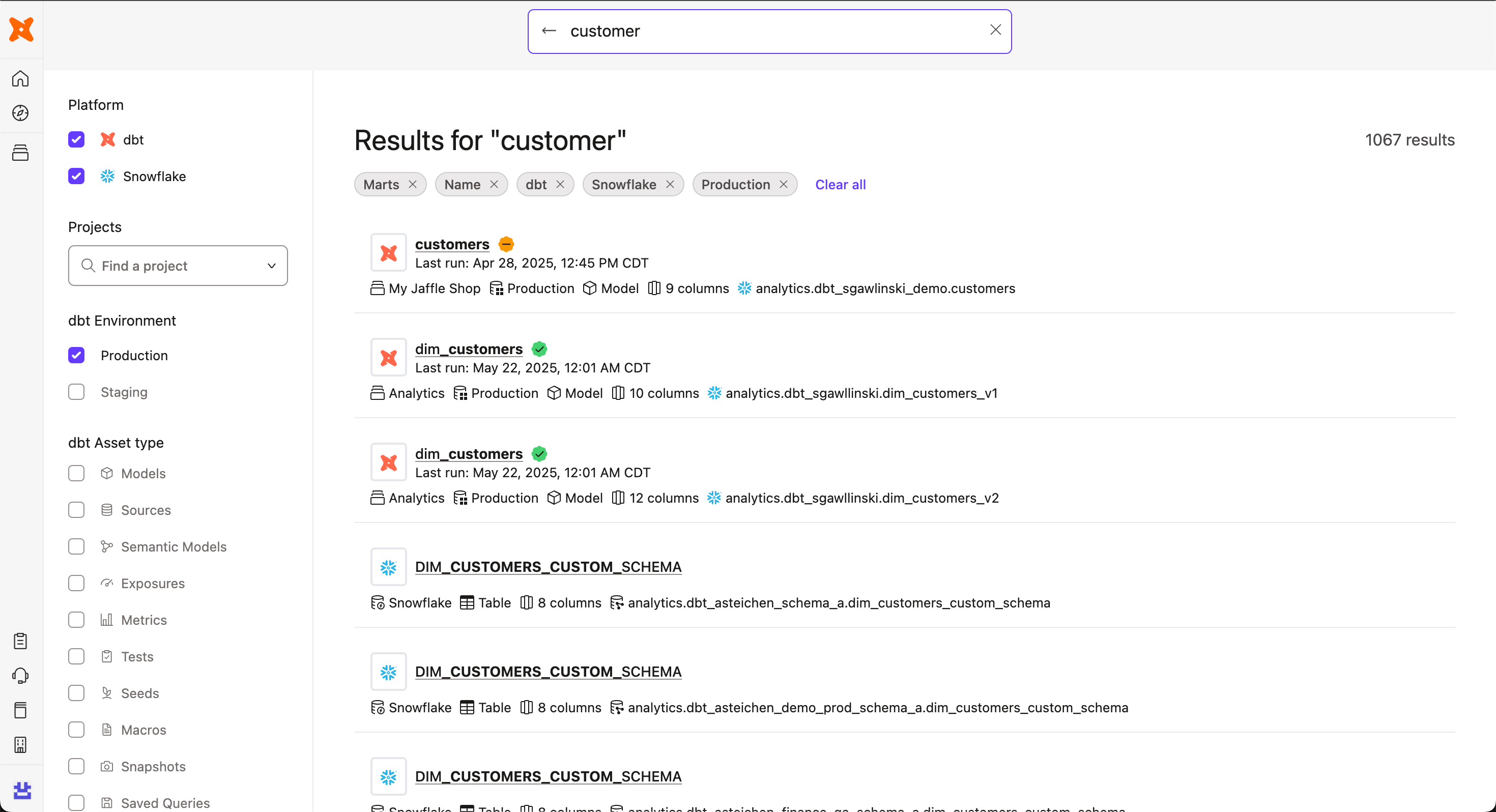

Broaden data discovery with an expanded dbt Catalog

The dbt Catalog (formerly dbt Explorer) now goes beyond just dbt models, and allows users to search and explore Snowflake assets, like tables and views, directly within dbt. This unified view helps teams move faster and make more informed decisions, without switching tools or duplicating efforts. By centralizing discovery, the expanded Catalog streamlines collaboration, accelerates insight, and ensures governance in dbt workflows. The ability to find and visualize Snowflake assets in dbt Catalog is now in Preview, with integrations for other data platforms coming soon.

Empowering organizations with cost optimization and enterprise features

We're introducing new features to make dbt analytics workflows more cost-efficient, flexible, and enterprise-ready. These updates help teams reduce overhead, improve visibility, and work confidently across clouds and platforms.

Understand and optimize transformation spend with the cost management dashboard

Understanding data platform costs has been a persistent challenge for data leaders. Through monitoring, remediation, and state-aware orchestration, dbt provides comprehensive cost management capabilities. While monitoring gives visibility into warehouse spend and remediation helps fix existing inefficiencies, Fusion-powered state-aware orchestration prevents unnecessary costs by intelligently avoiding redundant builds and queries in the first place.

Rather than struggling with billing data or building custom pipelines, you can track spend directly in dbt. The cost management dashboard provides detailed breakdowns by account, project, environment, and model. This makes it simple to identify high-cost workloads, find optimization opportunities, and measure your ROI.
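The breakdowns described above are essentially group-by sums over per-run cost records. As a minimal sketch, assuming a hypothetical record with project, environment, model, and a cost figure (the dashboard’s real data model is not described here), this is how spend can be rolled up along any combination of those dimensions and ranked to surface the most expensive workloads. The numbers are illustrative only.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRunCost:
    """One hypothetical cost record: a single model run in a project and environment."""
    project: str
    environment: str
    model: str
    cost_usd: float


def spend_by(records: list[ModelRunCost], *dimensions: str) -> dict[tuple, float]:
    """Sum cost over the requested dimensions, most expensive group first."""
    totals: dict[tuple, float] = defaultdict(float)
    for record in records:
        key = tuple(getattr(record, d) for d in dimensions)
        totals[key] += record.cost_usd
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


# Illustrative numbers only, not real spend data.
runs = [
    ModelRunCost("analytics", "prod", "fct_orders", 4.0),
    ModelRunCost("analytics", "prod", "fct_orders", 4.0),
    ModelRunCost("analytics", "prod", "dim_customers", 1.5),
    ModelRunCost("analytics", "staging", "fct_orders", 0.5),
]
print(spend_by(runs, "environment"))  # {('prod',): 9.5, ('staging',): 0.5}
print(spend_by(runs, "environment", "model"))
```

Ranking the groups is what turns raw billing numbers into a to-do list: the top entry is the first candidate for optimization.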

With the cost management dashboard, you can:

- Compare spend over time

- Drill into specific areas of platform spend

- Tie optimization work to real savings

- Track how Fusion-powered state-aware orchestration reduces warehouse compute costs

- Uncover waste with confidence

We’ve been using these features internally and have already identified more than $10,000 in yearly savings with less than two hours of work. This is transformative for analytics teams looking to shift from reactive cleanup to proactive optimization.

“Before using the cost monitoring dashboard, we relied on a combination of Snowflake’s native usage reports and internal dashboards to track spend. It was difficult to tie costs back to specific dbt models or teams, and that made optimization slow and reactive. The cost management dashboard in dbt has helped us connect transformation costs directly to the work our team is doing. We’ve been able to pinpoint expensive models and make quick improvements. It’s changed the way we manage and prioritize our workloads.”

Ra Raman, Director, Data Engineering & Analytics @Zscaler

The cost management dashboard is currently available in Preview for Snowflake customers, with other platforms and functionality coming soon.

Simplify user management with SCIM (GA)

Manual user management doesn't scale. dbt now supports SCIM for automatic user provisioning and de-provisioning through identity providers like Okta and Entra ID. This keeps access in sync with your organization without burdening your admins. SCIM is generally available for Okta and in Preview for other providers.
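SCIM itself is an open standard (RFC 7643 for the schema, RFC 7644 for the protocol), so the messages an identity provider sends are well defined. The sketch below builds two generic SCIM 2.0 request bodies: one to provision a user and one to deactivate them. It only illustrates the protocol; dbt’s specific SCIM endpoint and the attributes it supports are not covered in this post, and individual identity providers may format deactivation slightly differently. The function names are hypothetical.

```python
import json

USER_SCHEMA = "urn:ietf:params:scim:schemas:core:2.0:User"
PATCH_OP_SCHEMA = "urn:ietf:params:scim:api:messages:2.0:PatchOp"


def provision_user(user_name: str, given_name: str, family_name: str) -> dict:
    """Body of a SCIM `POST /Users` request using the core User schema (RFC 7643)."""
    return {
        "schemas": [USER_SCHEMA],
        "userName": user_name,
        "name": {"givenName": given_name, "familyName": family_name},
        "emails": [{"value": user_name, "primary": True}],
        "active": True,
    }


def deprovision_user() -> dict:
    """Body of a SCIM `PATCH /Users/{id}` request that deactivates a user (RFC 7644)."""
    return {
        "schemas": [PATCH_OP_SCHEMA],
        "Operations": [{"op": "replace", "path": "active", "value": False}],
    }


print(json.dumps(provision_user("ada@example.com", "Ada", "Lovelace"), indent=2))
print(json.dumps(deprovision_user(), indent=2))
```

Because deprovisioning is just a flag flip sent automatically by the identity provider, access is removed as soon as someone leaves, without an admin having to remember to do it.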

Flexibility across catalogs and clouds

dbt is expanding support for hybrid and multi-cloud data architectures. You can now integrate with Iceberg, Unity, Polaris, and BigLake catalogs across Snowflake, Databricks, and BigQuery. This gives teams the freedom to choose the tools and platforms that best serve their needs without getting locked into a single vendor.

We've also launched a new hosting option on Google Cloud that enables co-location of your data and analytics layers. This includes support for Private Service Connect and GCP Marketplace procurement (coming soon), streamlining deployment in regulated or high-security environments. GCP hosting is available in North America today.

See you at Summits

We couldn’t be more energized about the future and about helping our passionate, vibrant community continue to thrive with dbt. Fusion is the future of dbt, and we can’t wait to see what you build on top of it. We’ll be on the road in San Francisco in June for both Snowflake Summit and Databricks Summit, and we look forward to seeing you there!
