What is dbt Live?

The dbt Live: Expert Series consists of 60-minute interactive sessions where dbt Labs Solutions Architects share practical advice on tackling common analytics engineering problems. In this session, Isabela Sobral demonstrated how to choose and configure a deployment workflow.

Event agendas are shaped by audience requests, so whether you’re new to dbt or just want to sharpen your skills, we’d love to see you there! Register to take part in the next live session.

Session Recap: Designing a Deployment Workflow

The APAC-friendly edition of dbt Live features two experts from dbt Labs:

- Isabela Sobral, Solutions Architect

- Afzal Jasani, Solutions Architect

Isabela dove right into a topic she has discussed with many dbt prospects and customers: how to choose a deployment workflow and implement it.

You can watch the full replay here or read on for a summary of the session.

How do we choose a deployment workflow?

To deliver trusted analytics reliably, Isabela advises organizations to choose a deployment workflow that fits their needs for security and confidence. Organizations should keep their deployment workflow as simple as possible and:

- Think critically about the added value of each stage in deployment for your team and stakeholders

- Take advantage of the native features of dbt and your git system to save time, headaches, and tears

- Share your approach with the dbt Community; we all benefit from learning from each other’s experiences

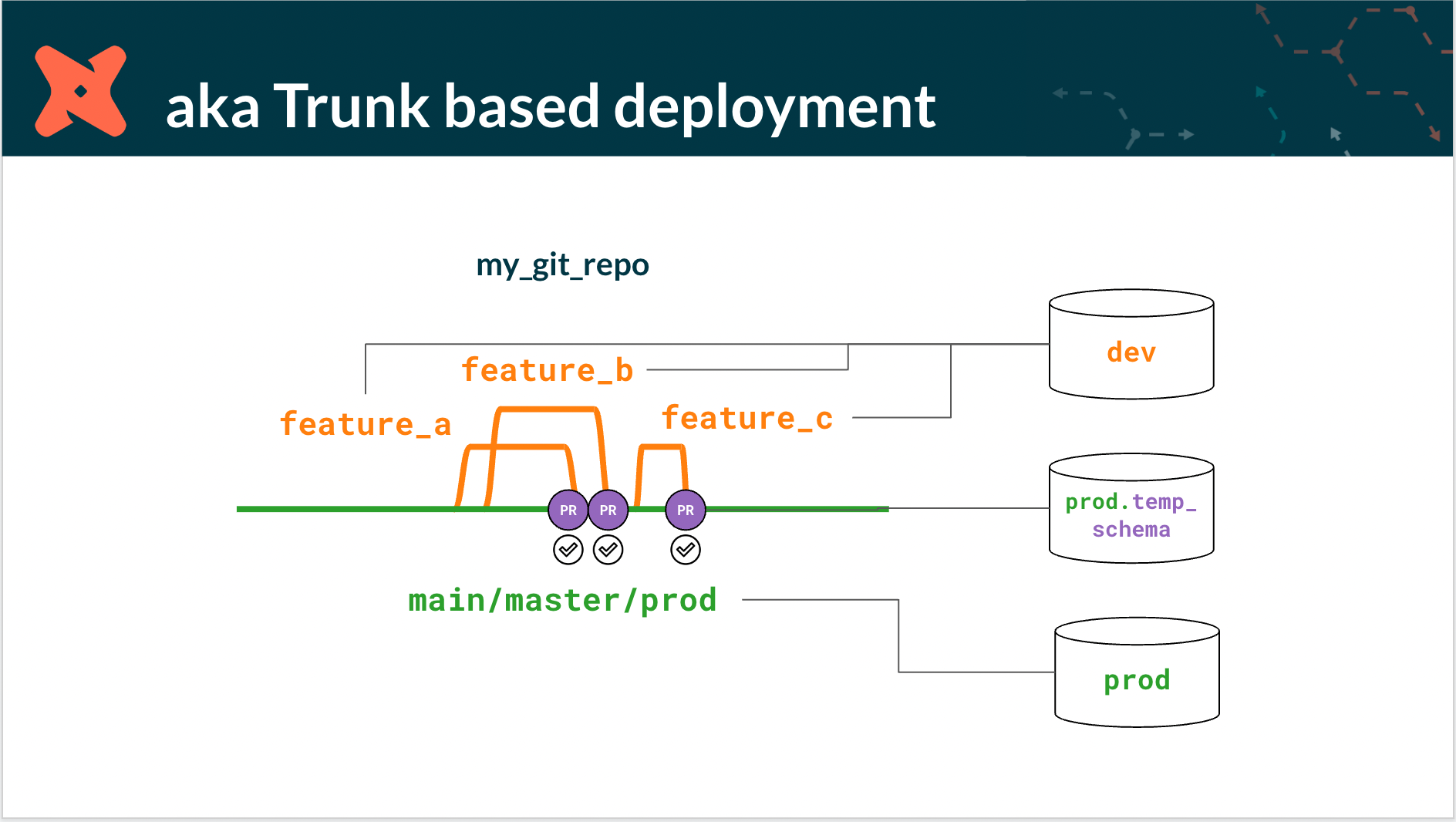

Using a Trunk Based Workflow

Most organizations start out with a simple trunk-based deployment workflow, shown below:

Within your data warehouse, you’ll set up development and production databases. In your git repository, you’ll have a main branch that developers clone and branch from to work on feature requests, such as enabling a new analysis, fixing a bug, or making a data correction.

To run continuous integration (CI) tests on new features each time a pull request is created, you can configure CI in dbt Cloud or use a tool like GitHub Actions with dbt Core. Continuous integration lets your team define a set of tests that each change must pass before a pull request merging a feature branch into the main branch can be approved.
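For teams on dbt Core, the CI check can be a script that builds and tests only the models changed in a pull request. The Python sketch below assembles such a `dbt build` call using dbt Core's state selection (`--select state:modified+`, `--state`, `--defer`). It assumes that a production `manifest.json` has been saved to a `prod-artifacts/` directory and that your profile defines a target named `ci`. Both names are hypothetical. This is one possible approach, not the setup shown in the session.

```python
import subprocess
from pathlib import Path
from typing import List


def build_ci_command(prod_state_dir: str, target: str = "ci") -> List[str]:
    """Return a dbt Core command that builds and tests only changed models.

    Assumes a production manifest.json lives in `prod_state_dir` (hypothetical
    path) and that the profile has a target named `ci` (hypothetical name).
    """
    return [
        "dbt", "build",
        # Models modified on this branch, plus everything downstream of them.
        "--select", "state:modified+",
        # Compare against the production manifest to detect what changed.
        "--state", prod_state_dir,
        # Point refs to unselected upstream models at their production versions.
        "--defer",
        "--target", target,
    ]


def run_ci_gate(prod_state_dir: str = "prod-artifacts") -> bool:
    """Run the CI build and return True only if every model and test succeeded."""
    if not (Path(prod_state_dir) / "manifest.json").exists():
        raise FileNotFoundError(f"No production manifest found in {prod_state_dir}")
    result = subprocess.run(build_ci_command(prod_state_dir))
    return result.returncode == 0
```

In a CI job, a `False` result from `run_ci_gate()` would be reported as a failing check, so the pull request cannot merge until its tests pass.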

Isabela encouraged teams to commit early and often and to avoid long-lived branches, which increase the likelihood of merge conflicts and negate the benefits of an automated continuous integration workflow.

Trunk-based deployment lets data teams move quickly while ensuring quality control checks pass before production data is updated. However, some organizations need less speed and more confidence that changes will not disrupt decision-making.

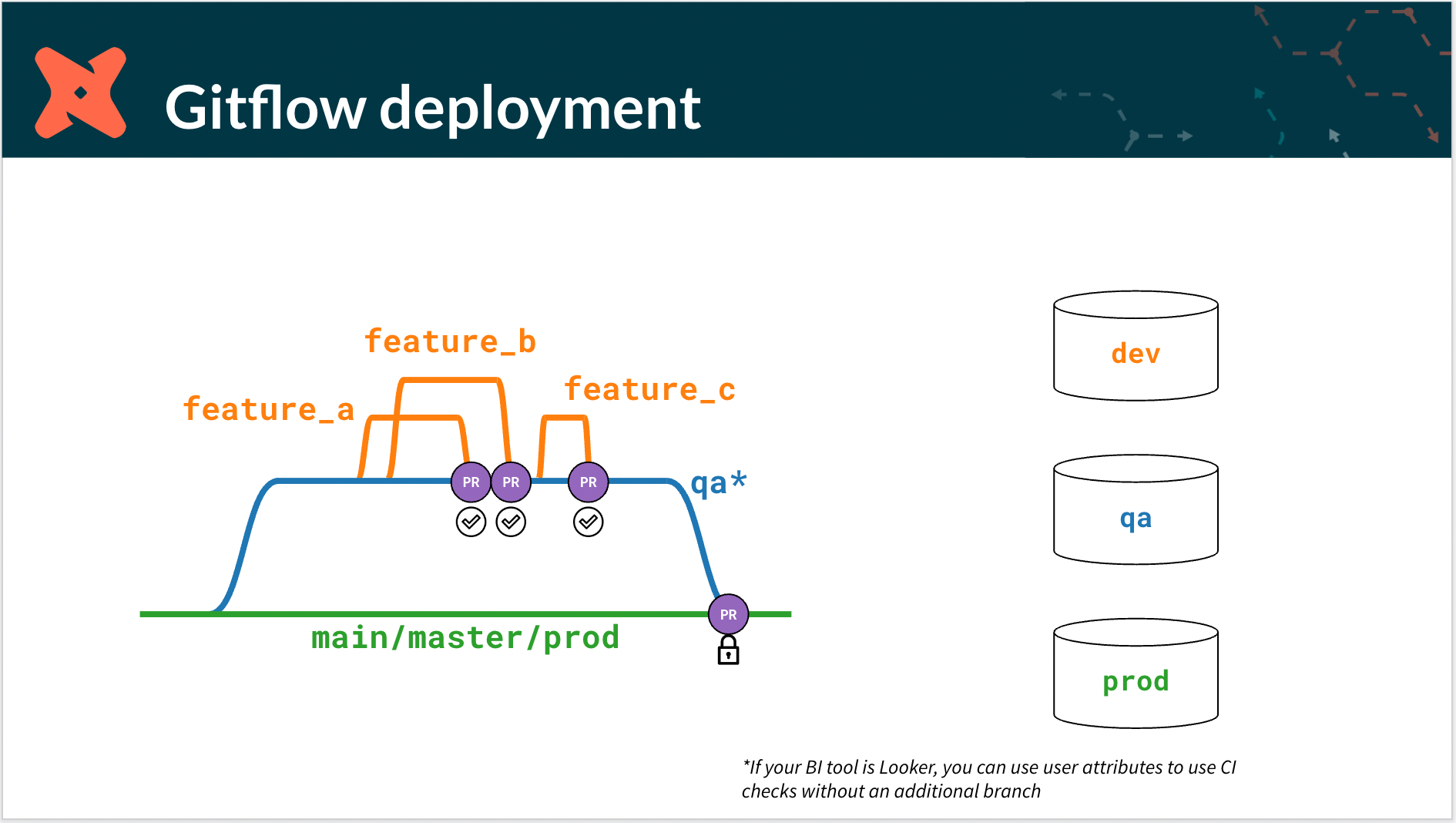

Using a Gitflow Workflow and Configuring dbt Cloud

A Gitflow deployment strategy can help organizations slow down feature releases to meet business requirements for a stable data platform. Its weekly or monthly release schedules also make changes easier to communicate.

Here is an example of a Gitflow deployment strategy:

Developers working with a Gitflow deployment create feature branches off the qa branch to work on their features. Once a feature is complete, they commit their changes, validate their model changes, and merge the feature branch into the qa branch, as shown above.

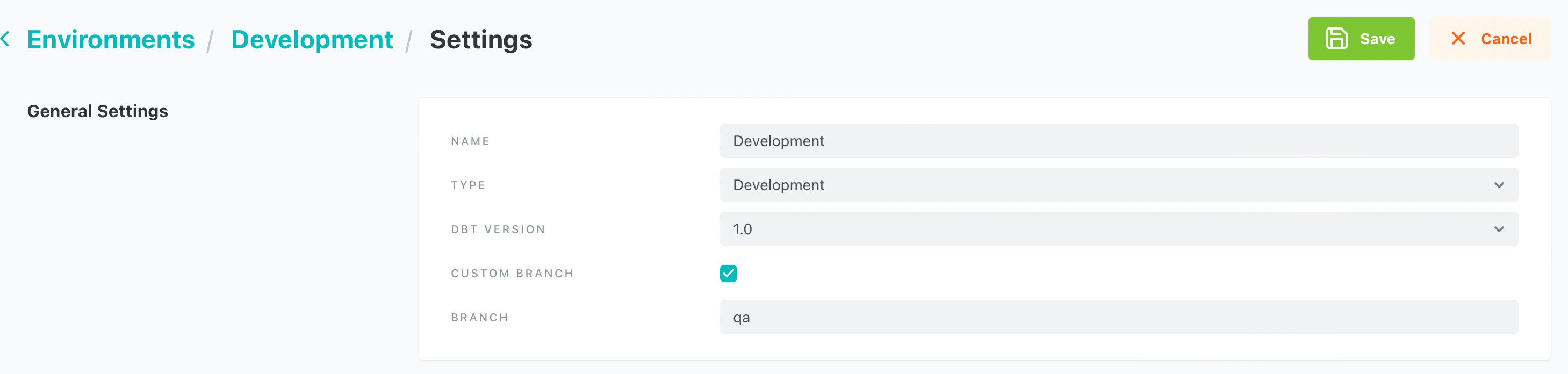

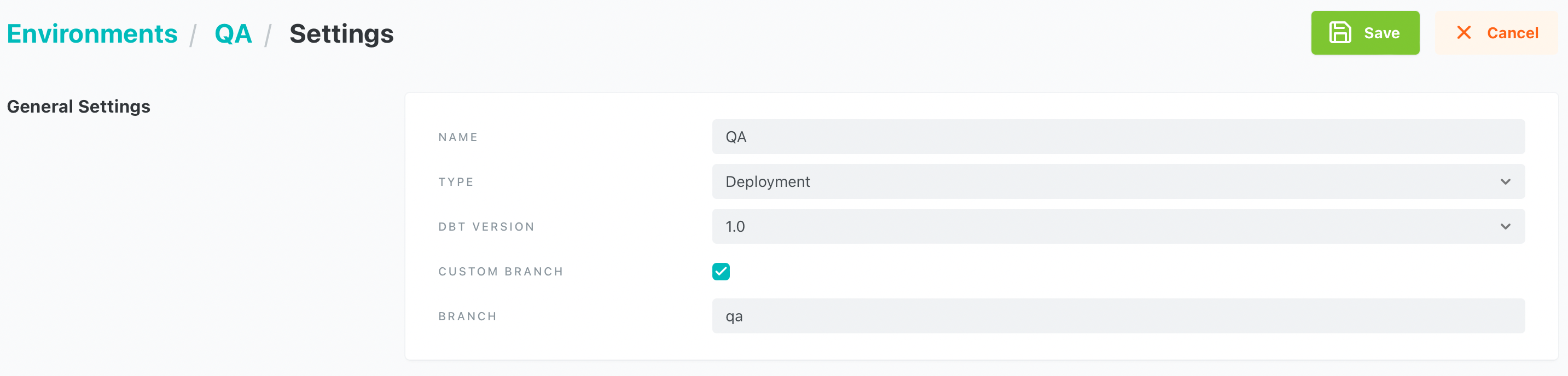

In dbt Cloud, you can set the branch your development environment checks out from by following these steps:

- Log in as an Administrator

- Go to Environments in your dbt Cloud project

- Click your Development environment

- Click Settings

- Click Edit

- Check the box for Custom Branch

- Enter the branch name (in the example above, “qa”)

- Click Save

With this configuration, developers check out code only from the qa branch, not from the main branch.

On the database side, once a feature branch is merged into the release branch (qa), a QA database is updated to reflect the current qa branch code. This creates a shared database where users can review upcoming release changes before they move to production, adding an extra quality gate.

You’ll need to create the QA database in your cloud data warehouse as a copy of your production database, then set up a matching environment in dbt Cloud. Configure this environment as a Deployment type and specify the Custom Branch that should be used to build the models for pull request validation.
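To make the three-environment Gitflow layout concrete, here is a minimal Python sketch that records which branch and database each environment uses. It does not call dbt Cloud. The fields only mirror the settings described above (environment type and Custom Branch). The environment names, the `analytics_*` database names, and the helpers `deployment_for_branch` and `development_base_branch` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Environment:
    name: str
    env_type: str       # "development" or "deployment", mirroring dbt Cloud's environment types
    custom_branch: str  # branch the environment checks out or builds from
    database: str       # hypothetical warehouse database the environment writes to


# Hypothetical Gitflow layout; every name here is illustrative.
ENVIRONMENTS = [
    Environment("Development", "development", "qa", "analytics_dev"),
    Environment("QA", "deployment", "qa", "analytics_qa"),
    Environment("Production", "deployment", "main", "analytics_prod"),
]


def deployment_for_branch(branch: str) -> Optional[Environment]:
    """Return the deployment environment that builds `branch`, or None if none does."""
    for env in ENVIRONMENTS:
        if env.env_type == "deployment" and env.custom_branch == branch:
            return env
    return None


def development_base_branch() -> str:
    """Branch developers create feature branches from (the dev environment's Custom Branch)."""
    dev = next(env for env in ENVIRONMENTS if env.env_type == "development")
    return dev.custom_branch
```

Keeping the layout in one place like this makes it easy to check at a glance that developers branch from qa, that qa builds into the QA database, and that only main reaches production.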

At the end of a development cycle or sprint, your organization’s Release Manager opens a pull request in git. This pull request takes the latest version of the qa branch and validates it against the main branch of your repository using the QA environment.
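The branch rules of this Gitflow setup can be written down as a small policy check, for instance one run in CI when a pull request is opened. This Python sketch is an illustration under assumptions, not a dbt or git feature. The `feature/*` naming convention and the `is_allowed` helper are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical Gitflow policy: target branch -> source branch patterns allowed to merge into it.
# "feature/*" is an assumed naming convention for feature branches.
ALLOWED_SOURCES = {
    "qa": ["feature/*"],  # day-to-day feature work lands in qa
    "main": ["qa"],       # only the qa -> main release pull request reaches production
}


def is_allowed(source: str, target: str) -> bool:
    """Return True if a pull request from `source` into `target` follows the policy."""
    patterns = ALLOWED_SOURCES.get(target, [])
    return any(fnmatchcase(source, pattern) for pattern in patterns)
```

With this policy, a feature branch opened directly against main is rejected, so every change passes through the QA database before release.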

These two workflows make up the majority of what Isabela sees in her work. Other setups exist, but she reminds us to keep it simple and build a workflow that suits your organization’s needs.

You can watch Isabela’s full presentation below, and the slides she talked through are here.

dbt Live: Expert Series APAC May Session

Participant Questions

Following Isabela’s presentation and configuration walkthrough, she and Afzal answered Community members’ questions, both those submitted in advance and those asked live by attendees.

Here are some of the questions:

When is the time to switch to an incremental model?

Isabela explained that incremental models are a dbt feature that lets you transform only the data that has arrived since the last run, which can improve performance. Because incremental loads add some complexity to configure and maintain, she recommends starting with a single table and then seeing how performance goes.

Afzal added that Google Analytics data is a good way to learn incremental models: because it is static data, it is a natural fit for incremental loading.
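In a dbt project, an incremental model is written in SQL with an `is_incremental()` block that filters new rows against the existing table (`{{ this }}`). The Python sketch below mimics only that high-water-mark idea on in-memory rows so the logic is easy to follow. The `event_time` column and the `incremental_load` helper are hypothetical and not part of dbt.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def incremental_load(target: List[Row], source: List[Row], ts_col: str = "event_time") -> List[Row]:
    """Append to `target` only the source rows newer than the latest timestamp already loaded.

    On the first run (empty target) every source row is loaded, like a full refresh.
    Returns the rows added on this run.
    """
    if not target:
        new_rows = list(source)
    else:
        # High-water mark: the newest timestamp already in the target table.
        high_water_mark = max(row[ts_col] for row in target)
        new_rows = [row for row in source if row[ts_col] > high_water_mark]
    target.extend(new_rows)
    return new_rows
```

Filtering on rows newer than the latest loaded timestamp works well for static, append-only data like the Google Analytics example. Data whose rows change after they arrive needs a merge on a key (dbt's `unique_key` config), which this sketch does not attempt.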

What are the best practices for organizing a dbt project?

Isabela said this is a question we hear all the time, and she and Afzal shared several great resources on the topic, such as:

When is a multi-repo approach recommended?

Josh Devlin from the dbt Community asked this question live during the session, and we were thrilled to have him join us!

Isabela recommends asking your organization these questions to decide on your git repo approach:

- How important is transparency and collaboration across the business?

- Should all teams have access to other teams’ code?

- Is there data that some teams should or should not see?

She went on to recommend starting with a mono-repo because it works seamlessly and provides a full picture of the data model.

However, there are situations where a company wants separate repos. For example, after acquiring another company it may want to keep that company’s models separate, or it may want to prevent people from printing logs during production runs so they cannot see production data.

If you go the multi-repo route and want to see lineage, you will have to import the other projects to get a full lineage view. If projects depend on each other, establish a practice of importing the dependent packages so lineage stays up to date.
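To see why importing the other projects matters, here is a minimal Python sketch that treats each project's lineage as a map from a model to its direct parents. Lineage computed inside one repo stops at the project boundary. Merging in the imported project's graph lets the upstream walk continue. The project and model names and the helpers `merge_projects` and `upstream` are hypothetical. dbt itself builds the real graph from `ref()` calls once a project is installed as a package.

```python
from typing import Dict, List, Set

Graph = Dict[str, List[str]]  # model -> direct parent models


def merge_projects(*graphs: Graph) -> Graph:
    """Combine per-project graphs, as if the other projects were imported as packages."""
    merged: Graph = {}
    for graph in graphs:
        for model, parents in graph.items():
            existing = merged.setdefault(model, [])
            for parent in parents:
                if parent not in existing:
                    existing.append(parent)
    return merged


def upstream(graph: Graph, model: str) -> Set[str]:
    """Return every ancestor of `model` reachable in `graph` (iterative depth-first walk)."""
    seen: Set[str] = set()
    stack = list(graph.get(model, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(graph.get(parent, []))
    return seen
```

For example, if a hypothetical finance project's `fct_revenue` refs a marketing model `dim_campaigns`, the finance graph alone cannot show what `dim_campaigns` depends on. After merging in the marketing project's graph, the walk reaches those upstream models too.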

You can also check out this post from dbt Labs Analytics Engineer Amy, which covers different repo setups and considerations.

How can we connect Airflow with dbt?

Great news! We are hosting a dbt Live session with dbt Labs Solutions Architect Sung Won Chung on June 10 at 1 PM ET. Register here to join the session live.

Before the session, you can check out this GitHub repository for all the details on this integration. The Airflow integration allows dbt Cloud jobs to exist as tasks in an Airflow DAG. You can click into a task in Airflow and be linked directly to dbt Cloud to view the job.

Wrapping Up

Thank you all for joining our dbt Live: Expert Series with Isabela and Afzal!

Please join the dbt Community Slack and the #events-dbt-live-expert-series channel to see more question responses and to ask your questions for upcoming sessions.

Want to see more of these sessions? You’re in luck: we have more in store. Register for future dbt Live: Expert Series sessions with more members of the dbt Labs Solutions Architects team. And if you’re as excited as we are for Coalesce 2022, go here to learn more and register to attend.

Until next time, keep on sharing your questions and thoughts on this session in the dbt Community Slack!

Published on: May 27, 2022
