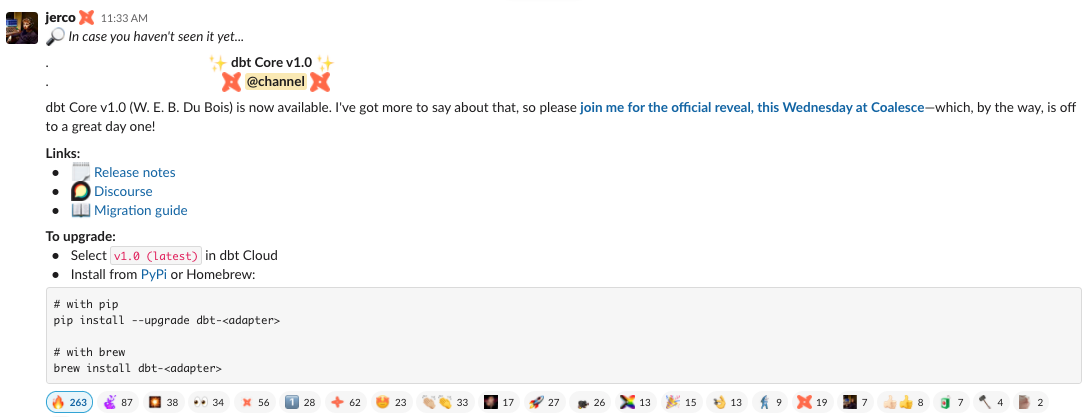

dbt Core v1.0 is here: 200+ contributors, 5,000 commits, 100x faster parsing speed

on Jun 03, 2024

Since the first line of code was committed to dbt Core in 2016, we (dbt Labs and a broader community of contributors) have worked to make analytics work more collaborative and reliable.

During this time we've reserved the right to make significant changes, to keep up with ever-evolving market needs. After 5,000 commits from 200+ contributors, we're ready to "lock in" what we believe will be a foundational component of the modern data stack. dbt Core v1.0 is a long-awaited milestone that signifies achieving a level of stability and reliability we believe is required to confidently build on this solution for years to come. With this version, users will benefit from:

- Improved performance to ensure quick development cycles in dbt projects of all sizes

- Increased stability, so you can feel confident building projects and tooling on top of dbt

- Opinionated workflows, such as the dbt build command for multi-resource runs

- Intuitive interfaces that make it easier to configure projects and deployments

- Easier upgrades to newer versions, so you're always getting the latest & greatest that dbt has to offer

Road to v1.0: What we learned and shipped along the way

"Make easy things easy, and hard things possible." This principle has guided product strategy at dbt Labs for the last 5 years, helping us to focus on building experiences that are intuitive for getting started, and extensible for the long run.

2016

Weekly Active Projects: 3 | dbt Slack Community: 13

In 2016 we launched the complete analytics engineering toolkit for Postgres and Redshift. Now data teams could write modular transformations, add custom tests, and create incremental and ephemeral models.

2017

Weekly Active Projects: 105 | dbt Slack Community: 251

In 2017 we focused on workflow efficiencies by introducing macros, materializations, and custom schemas and packages. We also expanded our warehouse partnerships with added support for Snowflake and BigQuery.

2018

Weekly Active Projects: 324 | dbt Slack Community: 1,101

In 2018 we focused on the data consumer with dbt docs, making data definitions and lineage more accessible and useful for stakeholders everywhere.

2019

Weekly Active Projects: 1,124 | dbt Slack Community: 3,457

In 2019 we launched dbt Cloud to abstract away the setup and maintenance of dbt Core, making analytics engineering more accessible and reliable. We also replaced "archives" with snapshots, giving analysts ever-better tools to capture and model slowly changing dimensions. Finally, and perhaps most importantly to some, we launched the IDE (integrated development environment).

2020

Weekly Active Projects: 3,185 | dbt Slack Community: 8,813

In 2020 we doubled down on speed and flexibility. We introduced complex node selection, Slim CI, and exposures. We sped up cataloging and caching on Snowflake, and we added first-class support for Databricks SQL. We also saw Fivetran and Census launch major integrations with dbt.

2021

Weekly Active Projects: 8,000 | dbt Slack Community: 22,051

What's inside: Unboxing v1.0

Arriving at v1.0 is a significant milestone in maturity for dbt, but reliability isn't the only value-add. This release is the culmination of a number of features designed to help every data team operate with maximum speed and efficiency:

dbt build: Why opinionated execution matters

One of the most exciting features of dbt Core in 2021 is dbt build. This single, opinionated command executes every resource in your project, in DAG order, following best practices. It will run models, test tests, snapshot snapshots, and seed seeds, all while prioritizing quality and resiliency. Reducing several steps to a single command saves time. It also reduces the opportunity for errors and inconsistencies as you scale.
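
To make "in DAG order" concrete, here is a minimal Python sketch of the idea. It is not dbt's implementation. The project graph and resource names are hypothetical.

The sketch treats a failing test as blocking everything downstream of the resource it tests. That matches our understanding of how dbt build handles test failures. It ignores threading and selectors, and it assumes each test depends on exactly one resource.

```python
from graphlib import TopologicalSorter

# Hypothetical project graph: each resource maps to the resources it depends on.
# Assumption for this sketch: every test depends on exactly one resource.
GRAPH = {
    "seed.country_codes": set(),
    "model.stg_orders": set(),
    "test.not_null_stg_orders_id": {"model.stg_orders"},
    "model.orders": {"model.stg_orders", "seed.country_codes"},
    "test.unique_orders_id": {"model.orders"},
    "snapshot.orders_snapshot": {"model.orders"},
}


def with_test_gates(graph):
    """Make every non-test node also wait for the tests on its parents."""
    tests = {n: deps for n, deps in graph.items() if n.startswith("test.")}
    gated = {}
    for node, parents in graph.items():
        extra = set()
        if not node.startswith("test."):
            extra = {t for t, tested in tests.items() if tested & parents}
        gated[node] = set(parents) | extra
    return gated


def build(graph, failing=frozenset()):
    """Visit resources in DAG order and skip anything downstream of a failure.

    Returns {node: "pass" | "fail" | "skip"}, in execution order.
    """
    gated = with_test_gates(graph)
    status = {}
    for node in TopologicalSorter(gated).static_order():
        if any(status[up] != "pass" for up in gated[node]):
            status[node] = "skip"   # an upstream resource or test did not pass
        elif node in failing:
            status[node] = "fail"   # simulated failure for this sketch
        else:
            status[node] = "pass"
    return status


for node, result in build(GRAPH, failing={"test.not_null_stg_orders_id"}).items():
    print(f"{result:>4}  {node}")
```

In this run, the failing not_null test on stg_orders causes orders to be skipped. Its test and the snapshot built from it are skipped too, while the unrelated seed still runs.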

100x faster parsing: How development cycle speed impacts business outcomes

There's much to be said for "flow state" in the world of software and analytics development. Interruptions to that flow, or more specifically "software-forced timeouts," erode productivity.

As Joel Spolsky put it in his piece on software development best practices: "If compiling takes even 15 seconds, programmers will get bored while the compiler runs and switch over to reading The Onion, which will suck them in and kill hours of productivity." Of course, we believe analytics engineers are just as likely to spend that time on other meaningful tasks, or to find clever, speedier workarounds. Still, we don't want involuntary context-switching, workarounds, or extended coffee breaks. This isn't a great user experience, and it saps the patience developers need for other important practices, such as testing and documentation.

Our goal all year long has been to reach startup times of under 5 seconds in development, for projects of all sizes. To make that concrete: on January 1, a project with thousands of models could take eight minutes to start up, every single time an analyst changed a single line of SQL. In dbt v1.0, the same process for the same project takes 5 seconds. That's 100x faster project parsing, helping users stay plugged into a single task.
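
For the example project above, the headline figure works out as follows. "100x" is a rounding of roughly 96x.

```latex
\frac{8\ \text{min}}{5\ \text{s}} = \frac{480\ \text{s}}{5\ \text{s}} = 96 \approx 100\times
```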

Building on dbt: How stability drives innovation

With dbt v1.0, dbt is officially out of "beta." Users and partners can feel confident building key data infrastructure on top of dbt, knowing that dbt Labs will not make breaking changes to documented interfaces.

Versioning metadata artifacts is a great example of why this matters. Since their introduction in 2018 to power the docs site, artifacts produced by every dbt Core invocation (such as manifest.json and run_results.json) have been storehouses of valuable information about dbt projects and executions. Open source community members used these artifacts as inputs to helper tooling (such as code auto-complete) and project health checks (such as calculating test and documentation coverage). Prior to 2021, though, the contents of these artifacts could change without warning in any version of dbt.
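
As an illustration of the kind of project health check described above, here is a minimal Python sketch. It computes documentation and test coverage from manifest.json.

The keys it reads ("nodes", "resource_type", "description", "depends_on") reflect our understanding of the manifest schema and may differ between schema versions. The sample manifest is hypothetical and heavily trimmed.

```python
import json
from pathlib import Path


def load_manifest(path="target/manifest.json"):
    """Read the manifest written by a dbt invocation (default target path)."""
    return json.loads(Path(path).read_text())


def coverage(manifest):
    """Return (documentation coverage, test coverage) as fractions of models."""
    nodes = manifest["nodes"]
    models = {uid for uid, node in nodes.items() if node["resource_type"] == "model"}
    if not models:
        return 0.0, 0.0
    documented = {uid for uid in models if nodes[uid].get("description", "").strip()}
    # A model counts as tested if at least one test node depends on it.
    tested = {
        parent
        for node in nodes.values()
        if node["resource_type"] == "test"
        for parent in node["depends_on"]["nodes"]
        if parent in models
    }
    return len(documented) / len(models), len(tested) / len(models)


# A hypothetical, trimmed-down manifest; the real file carries many more keys.
SAMPLE = {
    "nodes": {
        "model.shop.stg_orders": {"resource_type": "model", "description": "One row per order"},
        "model.shop.orders": {"resource_type": "model", "description": ""},
        "model.shop.customers": {"resource_type": "model", "description": "One row per customer"},
        "test.shop.unique_orders_id": {
            "resource_type": "test",
            "depends_on": {"nodes": ["model.shop.orders"]},
        },
    }
}

docs, tests = coverage(SAMPLE)
print(f"documented: {docs:.0%}, tested: {tests:.0%}")  # documented: 67%, tested: 33%
```

Against a real project, you would call coverage(load_manifest()) after any dbt invocation. Before versioned artifacts, a script like this could break whenever dbt changed the manifest's shape.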

Since v0.19, released in January, we have versioned and documented metadata artifacts, and limited schema changes to minor versions of dbt Core. After v1.0, we'll be doing even more work to minimize backwards-incompatible changes, and to communicate them clearly when they do happen. For those who build batch metadata integrations, that means no cause for unplanned work every 3 months. The v1.0 release goes one step further by including a full rework of dbt events and structured logging, including a versioned contract for log contents. This lays the groundwork for real-time integration solutions in years to come.
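
One way an integration can benefit from versioned artifacts is to check an artifact's schema version before trusting its contents. The sketch below reads metadata.dbt_schema_version, a URL of the form https://schemas.getdbt.com/dbt/manifest/v4.json.

The SUPPORTED table is hypothetical. It stands for whatever versions a given tool was written against, not for any particular dbt release.

```python
import json
import re

# Hypothetical: the artifact schema versions this integration was written against.
SUPPORTED = {"manifest": {4}, "run_results": {4}}

# Matches the tail of a schema URL such as ".../dbt/manifest/v4.json".
VERSION_RE = re.compile(r"/dbt/(?P<artifact>[a-z_]+)/v(?P<version>\d+)\.json$")


def check_artifact(raw):
    """Parse an artifact and refuse schema versions this tool was not built for."""
    artifact = json.loads(raw)
    url = artifact["metadata"]["dbt_schema_version"]
    match = VERSION_RE.search(url)
    if match is None:
        raise ValueError(f"unrecognized schema URL: {url}")
    kind, version = match["artifact"], int(match["version"])
    if version not in SUPPORTED.get(kind, set()):
        raise ValueError(f"{kind} schema v{version} is not supported")
    return kind, version, artifact
```

Failing loudly on an unknown version turns a silent misread into a clear error. It also tells the integration's maintainers exactly which schema they need to add support for.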

Who's building on top of dbt Core today?

dbt already supports dozens of databases and data warehouses, thanks to vendors and community members who have built adapter plugins, including Databricks, Materialize, Starburst, Rockset, and Firebolt. Then there are Lightdash, Metriql, and Topcoat, great examples of boundary-pushing solutions built via dbt project code. Of course, we can't forget those who've built integrations with the dbt metadata API: Hex, Mode, and Continual. We're tremendously excited about the early adopters who have paved the way, about the stable foundation that dbt Core v1.0 lays, and about the many integrations still to come.

Define once: configurations, extensibility, and a single source of truth

The flexibility and extensibility of dbt Core has long been one of its defining features. It just works, batteries included, out of the box. And when you want to replace those built-in batteries with your own supercharged versions, you can. This is a sign of growth, in your project and in your deployment. Any model can be reconfigured, whether one by one or dozens at a time. Any macro, serving any purpose, can be reimplemented in your project to meet your needs.
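
Reconfiguring "one by one or dozens at a time" comes down to layered configuration, where more specific settings override broader ones. Here is a minimal Python sketch of that layering. The folder and model names are hypothetical.

The sketch models project defaults, folder-level configs (as in dbt_project.yml), and a per-model config block. It omits the properties-file level. It also uses a plain override for every key, whereas real dbt merges some keys, such as tags, rather than replacing them.

```python
# Hypothetical configs at three levels, from least to most specific.
PROJECT_DEFAULTS = {"materialized": "view", "tags": ["nightly"]}

FOLDER_CONFIGS = {  # keyed by folder path, like nested keys in dbt_project.yml
    "marts": {"materialized": "table"},
    "marts/finance": {"schema": "finance"},
}

MODEL_CONFIGS = {  # stands in for a config() block inside one model's SQL file
    "marts/finance/arr": {"materialized": "incremental"},
}


def resolve_config(model_path):
    """Merge configs so that more specific levels override broader ones."""
    config = dict(PROJECT_DEFAULTS)
    folders = model_path.split("/")[:-1]
    # Apply folder configs from the outermost folder inward.
    for depth in range(1, len(folders) + 1):
        config.update(FOLDER_CONFIGS.get("/".join(folders[:depth]), {}))
    # A model's own config wins over everything above it.
    config.update(MODEL_CONFIGS.get(model_path, {}))
    return config


print(resolve_config("marts/finance/arr"))
```

One folder-level entry reconfigures every model beneath it (the "dozens at a time" case). A single model can still override that entry for itself.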

We also recognize that so much custom code can add bloat to projects. We've made changes this year to help:

- More intuitive ways to configure models, in all the ways and places you'd expect

- Better support for environment variables and global runtime configs that make projects portable to all the places they need to run

- Advanced capabilities with macro dispatch that enable large organizations to customize built-in or database-specific behavior for all in-house projects, saving thousands of lines of duplicated code
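
To show how macro dispatch lets one in-house implementation replace many copies, here is a minimal Python simulation. It is not dbt's code.

It follows our understanding of the lookup rule. For each package in a search order (roughly analogous to the search_order setting under dispatch in dbt_project.yml), dbt looks for an adapter-prefixed macro such as redshift__datediff. It then tries the adapter's parents, and finally default__datediff.

The registry contents and the redshift-to-postgres inheritance are assumptions for this sketch.

```python
# Hypothetical macro registry keyed by (package, macro name).
MACROS = {
    ("dbt_utils", "default__datediff"): "dbt_utils default datediff",
    ("dbt_utils", "postgres__datediff"): "dbt_utils postgres datediff",
    ("my_company", "redshift__datediff"): "in-house redshift datediff",
}

# Assumed adapter inheritance for this sketch: redshift builds on postgres.
ADAPTER_PARENT = {"redshift": "postgres"}


def adapter_prefixes(adapter):
    """The adapter itself, then its ancestors, then the 'default' fallback."""
    chain = []
    while adapter is not None:
        chain.append(adapter)
        adapter = ADAPTER_PARENT.get(adapter)
    return chain + ["default"]


def dispatch(macro_name, adapter, search_order):
    """Return the first implementation found, searching packages in order."""
    for package in search_order:
        for prefix in adapter_prefixes(adapter):
            key = (package, f"{prefix}__{macro_name}")
            if key in MACROS:
                return MACROS[key]
    raise LookupError(f"no implementation of {macro_name!r} for {adapter!r}")


# An in-house override wins when its package comes first in the search order.
print(dispatch("datediff", "redshift", ["my_company", "dbt_utils"]))
```

Because the override lives in one shared package, every in-house project that lists it first in its search order picks up the same behavior. No project needs its own copy.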

At the same time, as data footprints grow, the need for absolute consistency has only become more critical for the key metrics that define a business. Annual recurring revenue must mean the same thing in every tool, report, and discussion that references it, and so all of them must use the same definition of ARR. dbt Core v1.0 includes a start: a single place to define an organization's metrics, in dbt code, such that those same definitions can be used (and reused) across a complex data system. The full value of this work will only be realized with help from collaborators like Mode, Hex, and Fivetran, and from forthcoming features in dbt Labs' commercial offering. Together, these will enable users to interface with metrics from anywhere.
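
The "define once, reuse everywhere" idea can be sketched in a few lines of Python. A single metric definition is compiled into SQL on demand, so every consumer computing ARR gets the same logic.

The Metric fields are loosely modeled on dbt v1.0's metric properties and simplified. The ARR definition, table, and column names are hypothetical, and so is the to_sql compiler. This is an illustration of the design, not how dbt or its partners generate queries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One governed definition; fields loosely follow dbt v1.0 metric properties."""
    name: str
    model: str          # the dbt model the metric is computed from
    type: str           # aggregation to apply, e.g. "sum"
    sql: str            # expression being aggregated
    timestamp: str      # column used to bucket by time grain
    filters: tuple = ()  # (column, operator, value) triples


# Hypothetical ARR definition, written once.
ARR = Metric(
    name="arr",
    model="fct_subscriptions",
    type="sum",
    sql="annual_amount",
    timestamp="started_at",
    filters=(("status", "=", "'active'"),),
)


def to_sql(metric, grain):
    """Hypothetical compiler: every consumer derives SQL from the same definition."""
    where = " and ".join(f"{col} {op} {val}" for col, op, val in metric.filters) or "true"
    period = f"date_trunc('{grain}', {metric.timestamp})"
    return (
        f"select {period} as period, {metric.type}({metric.sql}) as {metric.name}\n"
        f"from {metric.model}\n"
        f"where {where}\n"
        f"group by 1"
    )


# A dashboard and a notebook asking for monthly ARR get identical queries.
dashboard_query = to_sql(ARR, "month")
notebook_query = to_sql(ARR, "month")
print(dashboard_query == notebook_query)
```

Changing the definition (say, adding a filter) changes it for every consumer at once. That is the consistency property the paragraph above describes.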

With the release of dbt Core v1.0, we're at the start of another exciting journey.

Published on: Dec 08, 2021
