dbt + Databricks: Bringing analytics engineering to the lakehouse

last updated on Oct 15, 2024

Our goal at Fishtown is to provide analysts and analytics engineers with mature, reliable tooling to do their highest-leverage work. To that end, we're excited to announce brand-new support for the dbt-spark plugin in dbt Cloud. I'll share why I believe this integration may permanently change how analysts, working with datasets small and large, will view the role and impact of Spark---and the centrality of analytics engineering---within their own organization.

Why did we do this?

We hold fast to the belief that business logic should be expressed in SQL. It's the lingua franca of data, the language that is most accessible to the greatest number of people at an organization. The rise of modern cloud data platforms has made it possible to deploy this principle at scale like never before. In particular, the data lakehouse promises to bring all the power and extensibility of data lakes into the intuitive, accessible framework of data warehouses.

In my time as an analyst, I knew that it was possible to write SQL all sorts of ways: a local Postgres instance, a faraway Redshift cluster, a SQLite file, or while dreaming of select-stars at night. But, like many analysts, I had always believed that Spark was a tool for data engineers, not analysts.

I now think that's plain wrong. We should recognize today that there's no such thing as a non-technical data analyst, and there is such a thing as an analytics engineer. Databricks, founded by the original creators of Apache Spark™, has provided the most compelling evidence yet in the form of a new platform interface targeted to SQL-savvy analysts with a tangible consequence: for the first time ever, SQL writers can be first-class Spark users.

Our belief is that some of the most important work happens between the traditional silos of data engineers and data analysts---the connective tissues of defining, testing, and documenting foundational data models. Databricks now offers a compelling and accessible interface for each of those two traditional personas. I believe that the real Databricks power users will be those who can make the most of both---and they'll do it with dbt.

Previous challenges for analysts using Spark

Using Spark makes me feel "closer to the metal." I can move, transform, and reshape tremendous amounts of data---be they tabular, semi-structured, or unstructured---by means of powerful and performant functions for reading JSON, collecting and exploding arrays, regex-parsing strings, the works.

Yet, as an analyst trying to use Spark for the very first time, based on my experience with other database technologies, I was struck by a few differences:

- I needed to provision and connect to a specific interactive cluster, and read query logs by accessing that cluster's Spark UI application.

- Metadata was stored and accessed differently.

- The SparkSQL dialect feels Hive-like, rather than using PostgreSQL-style syntax.

A few years ago, I had to educate myself on these differences, and translate my previous experience writing SQL for analytics. Today, that's changed: I can get an environment that's familiar right off the bat, powered by the combination of Delta Lake, the new Databricks SQL workspace, and the dbt-spark plugin.

Once stood up, a SQL-only DAG of data transformations, defined and run by dbt, is even richer in possibility because of all the things that are easy on Spark's: staging files in external storage; reading from streaming sources; feeding dbt models directly into statistical inference, regressions, and ML workflows.

With Databricks and dbt, Spark can be for everyone

Architecture tuned for analytics. As a hosted platform, Databricks has always made it easy to skip the heavy construction or superglue code of AWS EMR or Azure HDInsight. In particular, using the new Databricks SQL Workspace on top of Delta Lake, analysts can connect to a straightforward endpoint via a new-and-improved ODBC or JDBC driver. This is a well-known construct for analysts who are used to setting up their favorite SQL runner, and the connection parameters are familiar to anyone who has hooked up their database to a BI tool.

Spinning up a new SQL endpoint in the Databricks SQL Workspace is as simple as picking a name and a size, in the vein of Small, Medium, or Large. I can set the endpoint to auto-suspend after an interval of inactivity; it's ready to spin up again as needed, so dbt commands always find their mark after a minute or so. Plus, each endpoint offers a unified and filterable query history that will be familiar to any cloud warehouse user, preferable to several clicks through a running cluster's Spark Application UI.

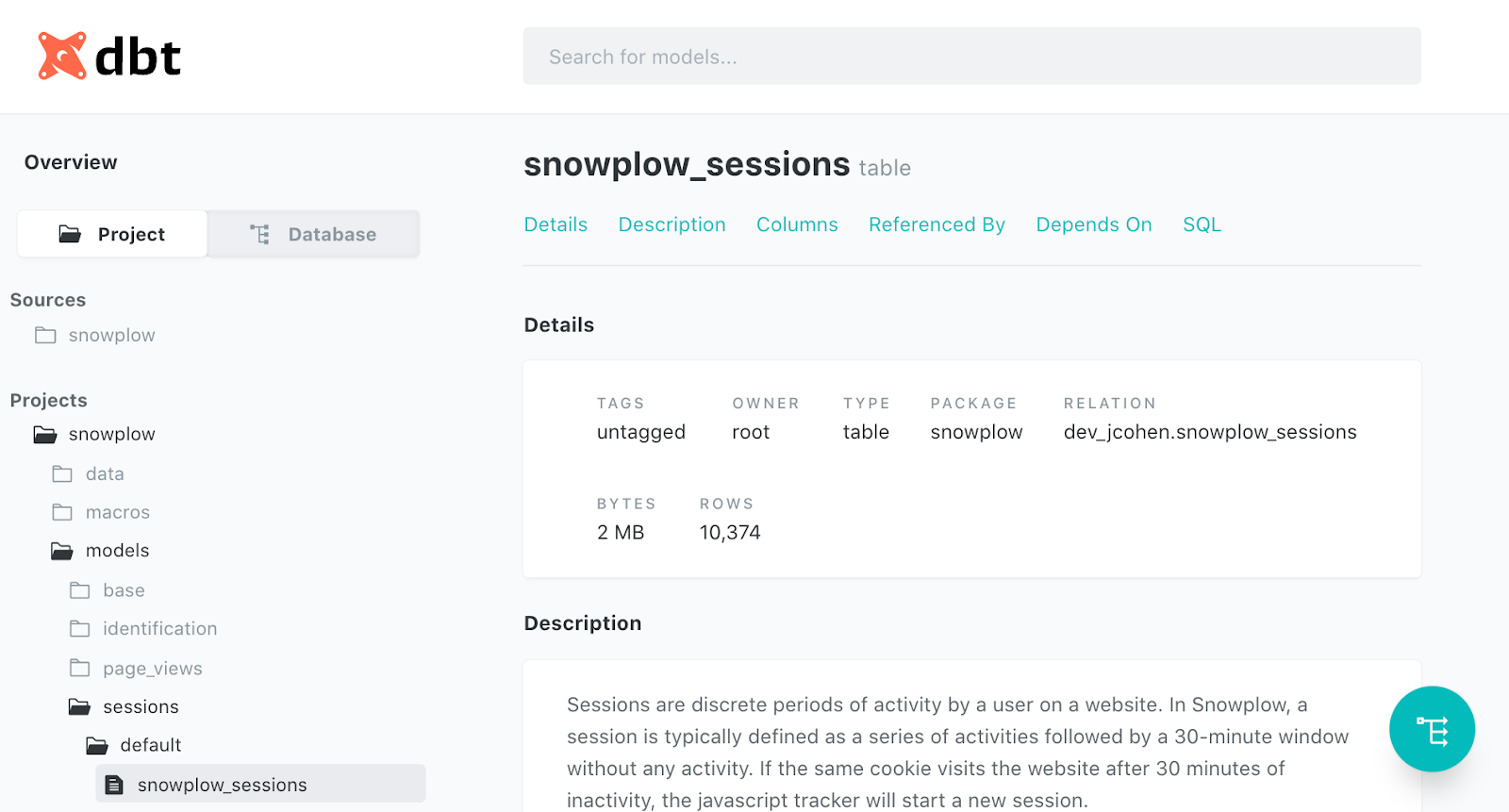

Metadata made accessible. Spark has show and describe commands; while they feel different from querying pg_ or information_schema tables, they return treasure troves of information about all the objects in a data lake. dbt leverages these commands to power its runtime cache and populate the documentation site. That site is generated and hosted right within dbt Cloud, accessible to anyone in your organization:

Open source SQL. Some common SQL functionality wasn't previously available for dbt-spark users. Now, dbt supports an under-the-hood mechanism (dispatch) to shim support for macros defined in other packages. This is what feature parity looks like for datediff on SparkSQL. An end user can install dbt_utils and spark_utils, call dbt_utils.datediff() as they would on any other database, and give it no more thought.

Total feature parity. The Delta file format and Databricks runtime offer a few significant quality-of-life features on top of Parquet + Apache Spark. To my mind, the biggest one is merge. By default, the dbt-spark plugin extends the common abstraction of incremental models via insert overwrite, which operates at the partition level only. That has appeal for very large, partition-friendly datasets---immutable event streams chunked daily, for instance---but that's far from being every table. With the flip of a config switch---file_format: delta and incremental_strategy: merge---incremental models will instead perform atomic upserts on a unique identifier. This also enables support for dbt snapshots, which are unavailable on Apache Spark.

Looking ahead

This is the first of many: we're building a foundation that we hope to scale to all open-source dbt plugins. If you're just generally interested in staying up to date on this topic, or chatting with peers interested in the same, the #db-spark channel in the dbt Slack community is the place for you. You can also reach out to me directly there, @jerco. The Databricks + dbt Cloud integration is currently in closed beta; if you're interested in trying it out, please drop us a note!

VS Code Extension

The free dbt VS Code extension is the best way to develop locally in dbt.