Helping companies secure their IP

Founded in 2001, Code42 helps employers secure their data while still fostering a collaborative environment. The company has developed technology that enables organizations to detect data leaks and source code or IP theft by their workforce. Based in Minneapolis, it employs over 350 people.

Data as the compass for decision-making

Data has always been at the forefront of how Code42 makes decisions:

“How do we make good decisions based on data? That’s what we’re looking for,” said Josh Carlson, Director of Analytics at Code42. “We have a small team of six, so we’re critical about where we allocate time. Our job is to build data models that help the whole business.”

Early analytics at Code42

Starting with Salesforce data on Tableau

The analytics team at Code42 got started seven years ago with a sales use case. Their goal: understand sales performance and uncover new revenue-generating opportunities.

“Our first request was to build sales dashboards, so our database consisted of just Salesforce data,” said Josh. “We started out with Tableau with a bunch of custom SQL.”

Although the nimble setup provided analytical value in the short term, it quickly ran into problems.

First, the same SQL had to be repeated across many queries, which led to errors and discrepancies in how metrics were calculated.

“It got overwhelming quickly,” explained Josh. “It was really messy. We ended up with the classic ‘Why does this report say this number and this other report say another number?’ We’d have one different character in a query and it’d take hours to find.”

Second, maintaining the Salesforce API integration was demanding work. At one point, one of the six members of Code42’s data team worked exclusively on keeping the integration running.

Moving to Snowflake with Fivetran

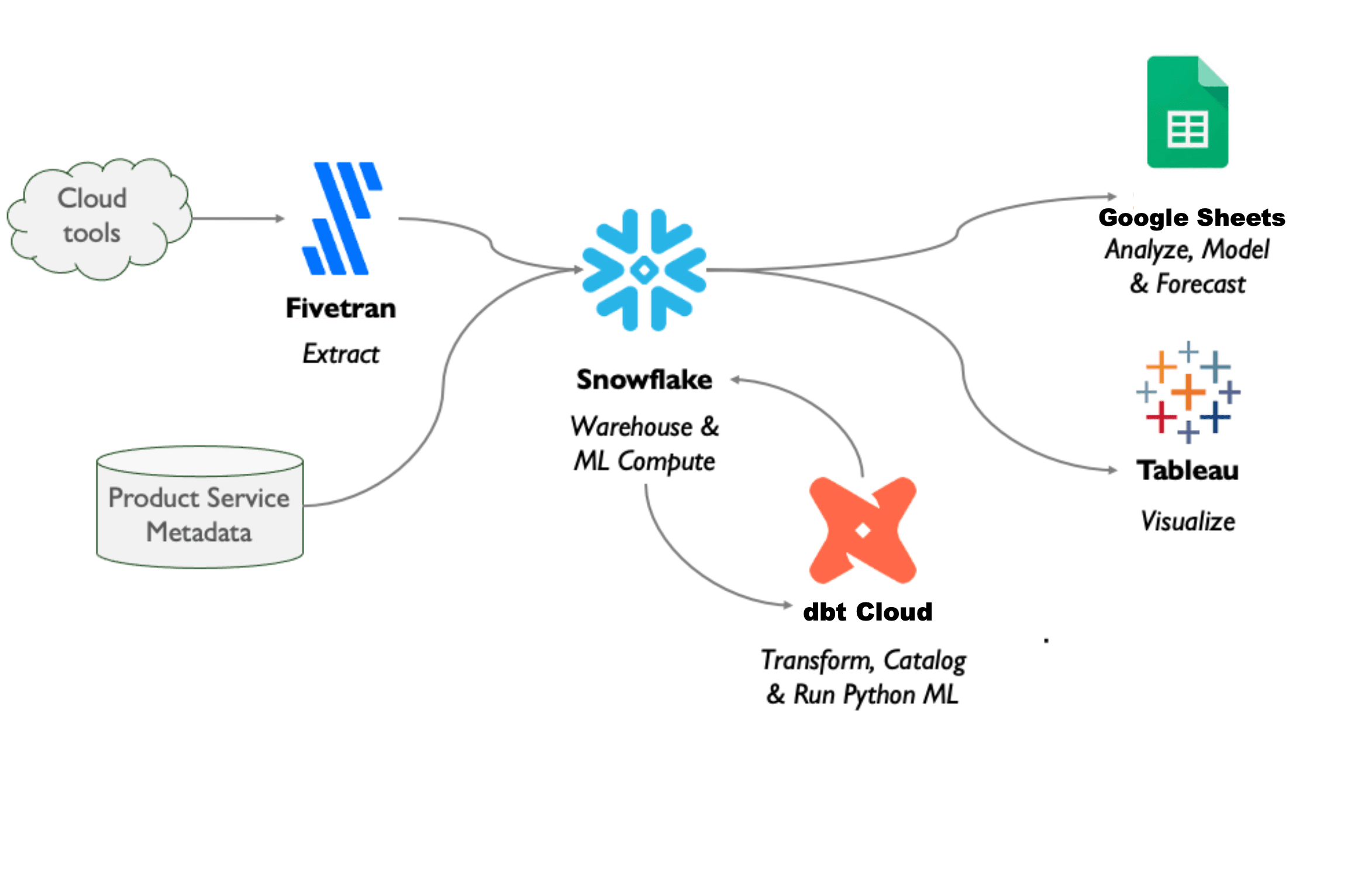

The next step for Code42 was to migrate from their internal Postgres database to a cloud warehouse (Snowflake) and to purchase an ingestion tool (Fivetran).

With the new tools, importing Salesforce data got a lot simpler, but the issues with querying and modeling the data weren’t resolved.

“With less time spent on the API integration, we could develop more views. We’d create them in Snowflake and query in Tableau,” explained Josh. “But it was getting harder to chain these things together smartly.”

Enter dbt: bringing governance and interconnectivity to SQL models

The last piece brought into Code42’s data stack was dbt. The company started with dbt Core to interconnect the different Snowflake views. This enabled Code42’s data team to reuse and piece together different SQL models.

“We could build one SQL query and easily reference it in another query. You didn’t have to repeat yourself,” emphasized Josh.
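In dbt, models reference each other with `ref()`, and dbt uses those references to build a dependency graph and run each model after everything it depends on. The sketch below illustrates that idea in Python, assuming a hypothetical set of Salesforce models (the names are illustrative, not Code42’s actual project) and using the standard library’s topological sorter to derive a valid build order. It is an analogy for how chained models are ordered, not dbt’s own implementation.

```python
from graphlib import TopologicalSorter

# Hypothetical models: each maps to the set of upstream models it references,
# loosely analogous to {{ ref('...') }} calls inside dbt SQL files.
MODELS = {
    "stg_salesforce_opportunities": set(),
    "stg_salesforce_accounts": set(),
    "int_opportunities_with_accounts": {
        "stg_salesforce_opportunities",
        "stg_salesforce_accounts",
    },
    "fct_sales_funnel": {"int_opportunities_with_accounts"},
}


def build_order(graph):
    """Return an order in which every model runs after all of its upstream references."""
    # TopologicalSorter expects node -> predecessors, which matches the mapping above.
    return list(TopologicalSorter(graph).static_order())


order = build_order(MODELS)
print(order)
```

Because `fct_sales_funnel` only references the intermediate model, its logic is written once and reused rather than copied, and the sorter guarantees the staging models are built first.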

Implementing a collaborative data development workflow with dbt Core

Enabling ROI-driven, cross-team collaboration

Code42’s data team supplies data and insights to the whole organization. By greatly reducing the number of net-new, custom models, the team built a single source of truth for the business. Different teams could look at the same metrics and work together to reach their revenue goals.

“Our marketing team and sales teams now communicate and collaborate because they share common sales funnel metrics,” said Josh. “Before, we focused just on sales for dashboarding. But now that we’ve expanded to the full sales funnel, teams work together from lead generation to signed contract.”

“Our data has also created a partnership between our product, operations, and finance teams to help manage costs and improve margins. That's some of our most impactful work since it's directly tied to profit.”

Outgrowing dbt Core

Difficulties scaling data infrastructure without CI/CD

The successful adoption of Code42’s data infrastructure dramatically increased the complexity of data maintenance. As the team built new queries on top of existing views, their models had more and more dependencies.

“dbt enables you to have dependencies, but running on Core, we lost sight of what breaks might occur if we push new code,” said Josh.

A data engineering workflow based on CI/CD provides this oversight, but building one was out of reach for the team:

“We didn’t have CI/CD, nor did we have the time and resources to burn to build out a CI/CD framework on dbt Core,” said Josh.
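The core question a CI check answers here is “which models sit downstream of what I just changed?” The sketch below shows that impact analysis in Python, assuming a hypothetical dependency graph (model names are illustrative). It walks from a changed model to every model that depends on it, directly or transitively. This is similar in spirit to how dbt Cloud CI jobs can build only modified models and their children (for example with the `state:modified+` selector), but it is a simplified stand-in, not dbt’s implementation.

```python
from collections import deque

# Hypothetical model graph: each model maps to the upstream models it references.
PARENTS = {
    "stg_salesforce_opportunities": set(),
    "stg_salesforce_accounts": set(),
    "stg_product_usage": set(),
    "int_opportunities_with_accounts": {
        "stg_salesforce_opportunities",
        "stg_salesforce_accounts",
    },
    "fct_sales_funnel": {"int_opportunities_with_accounts"},
    "fct_product_engagement": {"stg_product_usage", "stg_salesforce_accounts"},
}


def downstream_of(changed, parents):
    """Return every model that depends on `changed`, directly or transitively."""
    # Invert the parent map so we can walk from a model to its dependents.
    children = {}
    for model, upstream in parents.items():
        for parent in upstream:
            children.setdefault(parent, set()).add(model)

    # Breadth-first search over dependents, visiting each model once.
    affected = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in children.get(node, set()):
            if child not in affected:
                affected.add(child)
                queue.append(child)
    return affected


print(sorted(downstream_of("stg_salesforce_accounts", PARENTS)))
```

A change to the accounts staging model touches both the sales funnel and the product engagement models, which is exactly the kind of “unexpected downstream impact” that is easy to miss when committing from a local machine without CI.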

Limitations of an on-premise system

Additionally, data developers working in dbt Core on their local machines lacked visibility into how their code would affect existing data infrastructure. Publishing code to production was therefore error-prone and difficult to troubleshoot.

“Analysts would go to Snowflake to run exploratory queries. After they had a good data source, they’d head to their local developer environment and commit to our on-prem Git. Then they’d hope it didn’t break anything,” explained Josh. “If it did, we’d have to dive into AWS to see the logs and try to understand what happened.”

This debugging process often took hours:

“I was happy if we managed to restore something the same day it broke,” winced Josh.

Deciding to move from dbt Core to dbt Cloud

Code42’s initial investment in dbt Core proved a success. But errors in dashboards and views were increasing, and resolving them took longer each week:

“We kept experiencing quality issues because we'd make changes with unexpected downstream impacts,” said Josh. “Dashboard users were letting us know something was offline or incorrect before we even noticed. So we decided to move to dbt Cloud.”

Since dbt Core had already proved its value, the argument for investing in dbt Cloud was simple: with higher-quality data and lower maintenance costs, the data team would be more productive and deliver better revenue-impacting insights.

Time savings and higher quality data with dbt Cloud

Leveraging dbt Cloud’s GitHub-integrated IDE and out-of-the-box CI/CD, Josh’s team established a new, scalable process for data development. The outcome: increased productivity and uptime.

“We got back 40 hours a week from time that was being spent maintaining models,” said Josh. “Now the team can provide higher quality dashboards, with way less effort—our dashboard uptime is now at 95%, whereas before the move to dbt Cloud, it hovered at 80%.”

Code stability and repeatability

By adding dbt Cloud to the stack, Code42’s team improved the consistency and stability of their data. Armed with operational tools such as scheduling, logging, and alerting, the team could spend less time maintaining their data infrastructure.

“I no longer needed to worry about ‘When was this table last refreshed?’” shared Josh. “I could go look in my dbt runs and see.”

The issue of metric discrepancy from repeating the same SQL queries for different views was also resolved:

“Before, I had to copy and paste the same code into whole new queries. Now I could use the same views for product, sales, and customer success—an immense time saver.”

With these changes, the team could instead use their time to deliver more value:

“By focusing less on maintenance, we decrease mental load and increase our mental capacity,” explained Josh. “The team can focus on what they love and we can all be more productive.”

Insights for reducing churn and increasing expansion

With increased time and capacity, Code42’s data team built more dashboards with a direct impact on the company’s bottom line. As a SaaS business, their “life and breath” lies in understanding user engagement in-product:

“We built dashboards that identified patterns and recommended corresponding tactics to the sales and customer success teams,” said Josh. “For example, we predict customers likely to churn and those primed for expansion.”

“It took us multiple iterations and data products to build these sophisticated dashboards,” said Josh. “We need to schedule multiple runs from different data sources in a designated order, join the outputs, and define the metrics. That is not something that’d be possible without dbt’s data development framework.”

Today, Code42’s product management team relies on this product usage data to shape its roadmap.

Next for Code42: semantic layer and model contracts

Standardizing metrics with the dbt Semantic Layer

Following its successful migration to dbt Cloud, Code42’s data team is now exploring more capabilities, such as the dbt Semantic Layer, to help predict what’s happening with leads:

“We’re starting to leverage metrics in machine learning and it’s all starting to come together,” said Josh. “We want to predict the win probability of a certain outcome and, then, use standardized metrics around marketing and sales to help inform the ML model.”

With this approach, the data team would deepen its direct impact on revenue.

Leveraging model contracts for governed data

dbt Cloud enables developers to declare upfront “guarantees” about the shape of a model, called “contracts”. This adds a further layer of governance: dbt checks whether a model’s transformation produces a dataset that matches its contract. Code42 wants to explore this functionality to make it easier and safer to leverage shared models.
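To make the idea of a contract concrete, the Python sketch below checks rows produced by a hypothetical shared model against a declared set of column names and types, and reports any mismatch. In dbt itself, contracts are declared in the model’s YAML properties and enforced when the model is built; this sketch only illustrates the principle of “declare the shape once, verify every build”, and the contract, column names, and helper function are all hypothetical.

```python
# Hypothetical contract for a shared model: column name -> expected Python type.
OPPORTUNITIES_CONTRACT = {
    "opportunity_id": int,
    "account_id": int,
    "amount": float,
    "stage": str,
}


def contract_violations(rows, contract):
    """Return human-readable violations; an empty list means the rows match the contract."""
    violations = []
    for i, row in enumerate(rows):
        # Column-set checks: every declared column present, nothing undeclared.
        missing = sorted(contract.keys() - row.keys())
        extra = sorted(row.keys() - contract.keys())
        if missing:
            violations.append(f"row {i}: missing columns {missing}")
        if extra:
            violations.append(f"row {i}: unexpected columns {extra}")
        # Type checks for the declared columns that are present.
        for column, expected in contract.items():
            if column in row and not isinstance(row[column], expected):
                actual = type(row[column]).__name__
                violations.append(f"row {i}: {column} is {actual}, expected {expected.__name__}")
    return violations


good_rows = [{"opportunity_id": 1, "account_id": 10, "amount": 2500.0, "stage": "Closed Won"}]
bad_rows = [{"opportunity_id": 2, "account_id": "10", "amount": 100.0}]

print(contract_violations(good_rows, OPPORTUNITIES_CONTRACT))
print(contract_violations(bad_rows, OPPORTUNITIES_CONTRACT))
```

The second call flags both the missing `stage` column and the string-typed `account_id`, so a consumer of the shared model learns about the breaking change from the check rather than from a broken dashboard.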

“Our centralized team manages many models, each with different contexts. If we can define a contract once, I can free my future self from having to remember the logic the next time I use that model. I’m guaranteed to build a better data product with less effort via CI/CD and contract enforcement.”

Advancing revenue use cases

The combination of a semantic layer, model contracts, and multi-project querying will enable the team to split their work into contexts, effectively defining data services so the team can deliver insights just in time for highly actionable, revenue-impacting metrics such as product usage and engagement data.

“We believe we can improve our sales funnel by increasing data velocity,” explained Josh. “There are a number of sales dashboards that are not real-time today. We’ve begun to test how to decrease delivery time, and it’s already working really well.”