JetBlue’s data infrastructure is managed by a central data team. This team maintains the data warehouse, owns data transformation, and ensures that data used for reporting meets compliance standards. Over the past few years, as data volumes have grown exponentially, the centralized data team structure has begun to reach its limits–there is too much data for a single team to own.

“Centralized data teams are often asked to be a catch-all for data related issues. We deal with infrastructure issues, business logic issues, data quality issues, and we have to be honest with ourselves that we create bottlenecks,” said Ben Singleton, Director of Data Science & Analytics at JetBlue. “If we can let people take greater ownership of the data that they themselves are experts in, we’ll all be better off.”

From central ownership to shared collaboration

In Ben’s first week at JetBlue, he joined a meeting with a team that is one of the primary producers of analytic insights for the airline to discuss a number of data concerns. “My welcome to JetBlue involved a group of senior leaders making it clear that they were frustrated with the current state of data,” Ben said. “It made it real to me on day one that there was a lot of work to be done. That was my call to action.”

In Ben’s mind, the legacy approach had to go completely: “I knew that we couldn't just work on making our Microsoft SSIS pipelines incrementally better, or making configuration changes to our Microsoft parallel data warehouse to reduce our nightly eight hour maintenance window to six and a half hours. We needed to rebuild from the ground up.” Rebuilding included both data infrastructure and data workflows, with the goal of adopting a setup that eliminated the current data engineering bottleneck and gave data analysts and consumers at JetBlue a bigger role to play in owning their own data sets.

“Data engineers spend all of their time in data, but they’re not necessarily experts on how it’s used by different business functions to generate insights,” Ben said. He offers a seemingly innocuous example: the number of passengers on a plane. Simple, right? Not so fast. Do you mean the number of paying customers or number of seats occupied, which includes commuting crewmembers? Do you include infants in lap? Do you mean the total souls on board including the flight crew? What about pets?! The people with this nuanced understanding of the data frequently aren’t the data engineers–they are the analysts and decision-makers in specific business units.
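To make the ambiguity concrete, here is a minimal sketch of how one flight can yield several defensible "passenger" counts. The category names and numbers are invented for illustration and are not JetBlue's definitions or data; in particular, treating lap infants as non-paying and excluding pets from souls on board are assumptions.

```python
# Hypothetical manifest for a single flight; every figure is illustrative.
manifest = {
    "paying_customer": 150,
    "commuting_crew": 3,   # crewmembers traveling in passenger seats
    "lap_infant": 2,       # assumed to occupy no seat of their own
    "flight_crew": 6,      # pilots and inflight crew working the flight
    "pet": 1,
}

# Definition 1: paying customers only.
paying_customers = manifest["paying_customer"]

# Definition 2: passenger seats occupied, which adds commuting crewmembers.
seats_occupied = manifest["paying_customer"] + manifest["commuting_crew"]

# Definition 3: customers including infants in lap.
customers_with_infants = manifest["paying_customer"] + manifest["lap_infant"]

# Definition 4: total souls on board (every person, assumed to exclude pets).
souls_on_board = sum(n for kind, n in manifest.items() if kind != "pet")

print(paying_customers, seats_occupied, customers_with_infants, souls_on_board)
```

Four reasonable definitions give four different answers for the same flight, which is why the person who knows how the number will be used is best placed to define it.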

“The only way we can support JetBlue in becoming a data-driven organization is if more people can participate in the data transformation process. My data team can’t scale fast enough to meet the needs of the company. We need to enable a distributed model of data management,” Ben said. The ability for an analyst to create the data assets they need to do their job is the secret to removing the data engineering bottleneck. If more people could contribute to data workflows, they would increase their own productivity while also reducing the burden on Ben’s team.

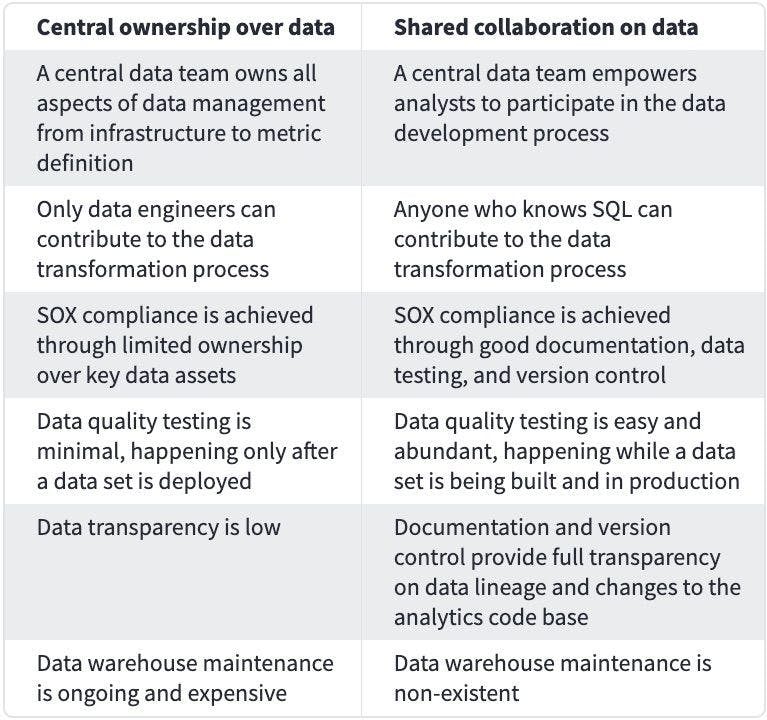

A democratized approach to data access increases productivity, but involving more people in the data development process also raises concerns about risk: “There are security considerations, Sarbanes–Oxley Act (“SOX”) compliance requirements, and risks to internal systems throughout the development process,” Ben said. The answer? Do both. The status quo just wasn’t an option. “We don’t have much of a choice. Given the challenges we're facing as an airline during an unprecedented global pandemic, we have to do more and be more efficient with less. Not only do we support regulatory reporting requirements, we need to enable critical decision-making that will ensure our recovery from this crisis. We can’t give up on or deprioritize data. We have to modernize, and we’re doing it quickly.”

| Central ownership over data | Shared collaboration on data |
| --- | --- |
| A central data team owns all aspects of data management from infrastructure to metric definition | A central data team empowers analysts to participate in the data development process |
| Only data engineers can contribute to the data transformation process | Anyone who knows SQL can contribute to the data transformation process |
| SOX compliance is achieved through limited ownership over key data assets | SOX compliance is achieved through good documentation, data testing, and version control |
| Data quality testing is minimal, happening only after a data set is deployed | Data quality testing is easy and abundant, happening while a data set is being built and in production |
| Data transparency is low | Documentation and version control provide full transparency on data lineage and changes to the analytics code base |
| Data warehouse maintenance is ongoing and expensive | Data warehouse maintenance is non-existent |

Choosing Snowflake + dbt

“I came to JetBlue knowing that introducing Snowflake and dbt would be the right game-changing move. I had bought into the vision that Tristan Handy and other folks in the dbt community articulated about modern data warehousing and the analytics engineering workflow. I still had to validate the technology and ensure it would meet JetBlue’s needs, but I trusted the approach,” Ben said.

Ben saw dbt and Snowflake as the perfect fit for helping JetBlue adopt a more modern, collaborative data workflow that would eliminate the current data engineering bottleneck. Together, these tools enabled his team to overcome obstacles familiar to any data team as well as some particularly sticky problems unique to a publicly traded Fortune 500 company working in a highly regulated industry:

1. Ensuring compliance and security

All publicly traded companies need to comply with the Sarbanes–Oxley Act. An important part of SOX compliance, particularly for data teams, is to ensure that code is reviewed and data is validated when changes are promoted from development environments to production. Because dbt is built to leverage the Git workflow, this process becomes much simpler. “Version controlled code ensures that changes can be implemented following audit-approved processes. This is a new workflow to most analysts, but they are often very willing to adopt new processes if it means gaining greater control over their data,” Ben said.

2. Delivering real-time data

JetBlue has two classes of data: batch data delivered at various intervals and real-time operational data. “Airlines can succeed or fail based on how they respond in a snowstorm,” Ben said. “JetBlue’s data infrastructure not only needs to help business leaders make long-term decisions, it needs to help crewmembers in system operations decide whether or not to keep the doors open an extra few minutes to accommodate a delayed connecting flight. These are high-pressure situations that require real-time data.”

“People might think of dbt as a tool that only supports batch workflows which update a few times a day or once daily, but dbt can be used for any kind of data transformation in your warehouse, including real-time use cases,” Ben said. To pull this off, the team used dbt to implement lambda views, which union historical data with the most current view of the data in Snowflake.
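The lambda view idea can be sketched as a view that unions a batch-built historical table with only those current rows that are newer than the last batch run. The example below is a minimal illustration using Python's built-in `sqlite3` module rather than Snowflake or dbt; the table names, columns, and rows are hypothetical, and the cutoff logic is one common way to avoid double counting, not necessarily the one JetBlue's team used.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical tables; names, columns, and rows are illustrative only.
    CREATE TABLE flight_status_historical (flight_id TEXT, status TEXT, updated_at TEXT);
    CREATE TABLE flight_status_current (flight_id TEXT, status TEXT, updated_at TEXT);

    -- Rows produced by the last batch transformation run.
    INSERT INTO flight_status_historical VALUES
        ('FL101', 'ARRIVED',  '2020-06-01T08:00:00'),
        ('FL202', 'DEPARTED', '2020-06-01T09:30:00');

    -- Freshly landed rows; the first one is already covered by the batch table.
    INSERT INTO flight_status_current VALUES
        ('FL202', 'DEPARTED', '2020-06-01T09:30:00'),
        ('FL303', 'BOARDING', '2020-06-01T10:15:00');

    -- The lambda view: historical rows plus current rows past the batch cutoff.
    CREATE VIEW flight_status_lambda AS
    SELECT flight_id, status, updated_at FROM flight_status_historical
    UNION ALL
    SELECT flight_id, status, updated_at FROM flight_status_current
    WHERE updated_at > (SELECT MAX(updated_at) FROM flight_status_historical);
""")

rows = conn.execute(
    "SELECT flight_id, status FROM flight_status_lambda ORDER BY updated_at"
).fetchall()
print(rows)
```

Because the view is evaluated at query time, readers see new rows as soon as they land, while the bulk of the data still comes from the cheaper, pre-built historical table.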

Lambda views provide real-time access to flight information, bookings, ticketing, and check-ins, among several other data sources–the data that ultimately makes or breaks the customer experience. “Delays in operational data result in suboptimal decisions, and one suboptimal decision across a network of a thousand flights a day can disproportionally affect the customer experience and be expensive,” Ben said. “Traveling can be stressful, especially in the time of coronavirus. We want to be really smart about using data to improve that experience.”

3. Catching data quality issues before they impact end users

“Previously, we monitored our data transformation jobs, but we didn’t actually monitor our data,” Ben said. They could tell if a job failed, but they couldn’t tell if the correct data was coming through. “Missing data is inevitable, but the data team should be the first to catch it. Incorrect data is not only embarrassing, but can be costly. We frequently weren’t aware that something broke until our users told us.”

dbt makes testing easy, and as a result, people write more tests. “dbt makes testing part of the development process. It’s just part of the work you do every day, so it doesn’t feel like an extra burden,” Ben said. And this daily work adds up. More testing improves data quality, data quality improves trust, and trust creates a happier and more productive data team. “Catching data quality issues before your users helps the culture of your team. No one likes to have fingers pointed at them. It’s an overlooked benefit of dbt–what it can do for morale,” Ben said.
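The difference between monitoring jobs and monitoring data can be shown with two small checks, loosely modeled on the not-null and uniqueness tests that dbt ships with. This is a plain-Python sketch, not dbt's API; the function names, the `bookings` rows, and the column names are hypothetical.

```python
from typing import Any

Row = dict[str, Any]


def not_null(rows: list[Row], column: str) -> list[Row]:
    """Return the rows that fail the check: column missing or None."""
    return [r for r in rows if r.get(column) is None]


def unique(rows: list[Row], column: str) -> list[Any]:
    """Return the non-null values that appear more than once in the column."""
    seen: set[Any] = set()
    dupes: set[Any] = set()
    for r in rows:
        value = r.get(column)
        if value is None:
            continue  # null handling belongs to the not_null check
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return sorted(dupes)


# Hypothetical rows: the load "succeeded", but the data itself is wrong.
bookings = [
    {"booking_id": 1, "flight_id": "FL101"},
    {"booking_id": 2, "flight_id": None},     # missing flight
    {"booking_id": 2, "flight_id": "FL303"},  # duplicate booking_id
]

failures = {
    "not_null(flight_id)": not_null(bookings, "flight_id"),
    "unique(booking_id)": unique(bookings, "booking_id"),
}
print(failures)
```

A job monitor would report this load as healthy; the two checks surface the missing flight and the duplicated key before an analyst does.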

4. Implementing a workflow that was accessible for analysts

Data availability was an urgent problem for JetBlue. Data pipeline jobs took eight hours to run, and during that time, the data warehouse was unavailable to analysts because the system couldn’t simultaneously handle the load of maintenance and user queries. “Our availability hovered around 65%,” Ben said. “In an age where people expect 99.9% uptime, 65% doesn’t remotely cut it anymore.” The legacy infrastructure required frequent repairs as the team worked to maintain pipeline jobs that were written 5, 10, or 15 years ago: “We had to watch our Microsoft SSIS transformation pipelines and our APS warehouse jobs because they frequently and unexpectedly failed throughout the night.” All of this was a result of the explosion of data that occurred in the last five years. With Snowflake, a data platform built for modern data volumes, these maintenance windows are virtually non-existent, meaning data is available to analysts anytime they need it.
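A quick back-of-the-envelope calculation shows how the eight-hour window relates to the availability figure. It assumes one maintenance window per 24-hour day and nothing else; the variable names are illustrative.

```python
HOURS_PER_DAY = 24
maintenance_hours = 8  # nightly window during which analysts cannot query

# Best-case availability if the window is the only source of downtime.
best_case_availability = (HOURS_PER_DAY - maintenance_hours) / HOURS_PER_DAY

# Downtime per day that a 99.9% uptime expectation would allow, in minutes.
allowed_downtime_minutes = (1 - 0.999) * HOURS_PER_DAY * 60

print(f"best case: {best_case_availability:.1%}")
print(f"99.9% uptime allows about {allowed_downtime_minutes:.2f} minutes per day")
```

Under that assumption the window alone caps availability at roughly 67%, so any unexpected overnight failures would pull the number lower, which is consistent with the figure of around 65% quoted above.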

“My hope is that by making our data really reliable and solid, we can get more people interested in asking questions of the data and getting their hands dirty,” Ben said. “We want our Excel power users to be motivated to learn SQL, and our SQL users to get inside the warehouse and build data models. We want our decision-makers to feel comfortable building their own dashboards and getting answers for themselves without always having to rely on analysts, even to answer basic questions.” Self-service success is dependent on accessible tooling.

The first part of the Snowflake and dbt migration involved the data engineering team migrating existing data transformations. With a solid foundation in place, the team is ready to roll out the new workflow to analysts with dbt Cloud. Ben is betting on dbt Cloud, which enables analysts to orchestrate the analytics engineering workflow from their browser, making data modeling accessible for analysts. “Our first group of 20 analysts have been trained on Snowflake and exposed to the data warehousing concepts guiding our approach, and the next step in the plan is to have interested data analysts trained on dbt Cloud.”

5. Improving data transparency and documentation

Airlines rely on a large number of specialized systems, most of which generate data that are analyzed to improve efficiencies. When Ben first began to explore JetBlue’s data warehouse, he found it difficult to answer even basic questions because he didn’t know what a column name represented, what source system created a data set, or how a metric was calculated. “We need to approach data warehousing like any other product IT might develop. It needs to be intuitive, well-organized, and simple,” Ben said.

Creating transparency in the warehouse starts with naming conventions–“No more abbreviations or acronyms.”–but also encompasses documentation. dbt documentation includes information about a project including model code and tests, information about the warehouse like column data types and table size, as well as descriptions that have been added to models, columns, and sources. “Documentation is important for newcomers, and also important for getting advanced analysts involved in the data transformation process.”

The journey toward data democratization

In the first stage of the migration, the data engineering team migrated 26 data sources to Snowflake and dbt. These data sets represented JetBlue’s most critical operational and customer data. In the next three months, Ben anticipates that this number will double. “We’ll be focused on building out our customer data models to provide enhanced ‘Customer 360’ insights. We have a fantastic loyalty program that we’re aggressively working to expand. My team is very excited to support those efforts through data,” Ben said.

Ben’s hope is that with Snowflake and dbt his team will be able to solve the frustration he heard in his first week at JetBlue and achieve a 100% improvement in analyst NPS. “I’ve been the angry analyst frustrated with the data engineering team in previous jobs. Now I’m the person who analysts will be pointing fingers at if they’re not happy!” Ben said. “So my team is working extremely hard to provide a world-class data stack and regain the trust of our analyst community. I’m confident it’s going to be a radical transformation. The new workflow with dbt and Snowflake isn’t a small improvement. It’s a complete redesign of our entire approach to data that will establish a new strategic foundation for analysts at JetBlue to build on.”